Is the Robotics Revolution Imminent?

History of Robotics, Inflection Points, Scaling Intelligence and Areas of Opportunity

🙋🏻♂️ A Short Preface

Hi there, glad you’re interested in the 7th edition of Deep Tech Demystified! This edition is a deep-dive into one of my favourite topics, robotics. Robotics is one of the verticals that reached the consensus stage in the venture world extremely fast, while - slightly exaggerating - having been declared nearly dead only a few years prior. This article is my attempt to cut through the hype in the market and to find a reasonable thesis from the perspective of a technical early-stage venture investor. To paint a complete picture, I will briefly look into the history of robotics and why it only scaled linearly over the last 50 years. Then, I will dive deeper into the converging factors and inflection points that led to the current sentiment about our mechanical overlords, and thoroughly argue for and against LLM-like scaling of robot intelligence towards general-purpose robots. To wrap things up, I shed light on the areas of opportunity I’m excited about and share my thoughts on humanoids as a mode of robotics. Apologies in advance for the slightly larger than usual word count, but this one really matters to me. Feel free to skip to the parts that are of interest to you via the agenda below or, for those on desktop, the navigation on the left!

📋 Table of Contents

🤖 A Brief History of Robotics

Robots have been in use since the early 60s, starting in the automotive industry at General Motors with George Devol’s Unimate, the first industrial robot. This mechanical arm was, although reprogrammable, highly specialized for tasks in die casting - a metal casting process that injects molten metal into a mold - used for example for engine blocks. Kawasaki also deployed the Unimate for welding tasks, replacing the work of 20 human welders per robot. Soon enough, the US and Japan turned into the industrial robot strongholds of the world. In parallel to this development, between 1966 and 1972, the Artificial Intelligence Center at the Stanford Research Institute (SRI) did research on a more advanced and also mobile robotics platform called Shakey, or the “first electronic person,” as referred to by Life magazine in 1970. This platform was the first approach to route planning and finding, as well as the rearranging of simple objects, and significantly influenced the robotics and AI techniques of today.

Besides the pure hardware, software also slowly but surely emerged from research, moved into industry and evolved from specific robot programs to general-purpose robot languages, with early versions developed in the 70s at MIT. For a more in-depth read about the evolution of robotic software, take a look here. These early days of robotics were not limited to industrial and research settings but, oddly enough, also reached the consumer market. The first consumer robot launched as early as 1958 and was a pool cleaner robot, the Roomba-of-the-seas if you will.

Although this early history might read as if robotics was already close to a mainstream phenomenon in those days, with breakthrough after breakthrough, this was not the case. Progress was largely driven by a niche community of enthusiasts and researchers through hackathons and competitions, like the DARPA challenges, paralleling the early days of the computer, AI and many other technologies. One of these early projects with lasting impact was the Robot Operating System (ROS), originally developed in 2006 by Stanford students who were tired of continuously re-implementing software infrastructure for robotics with every new project. ROS evolved from this starting point and is now widespread in robotics.

Nonetheless, most robotic applications were still only academically intriguing and not commercially interesting, due to a lack of maturity and a missing business case. Although we’ve already seen some level of success in robotic applications, e.g. with the $775M acquisition of Kiva Systems by Amazon to run their order fulfillment, adoption of robotics overall happened only in a linear manner, missing the inflection point we’ve seen, for example, with personal computers. The cases robotics has solved to date are very specific, because the problem space is just so incredibly complicated, with a myriad of edge cases, that constraints are necessary for deployment. Moving from specialized to generalized systems is a common theme in new technologies, so no surprise here. To stay with the computer analogy from above, one could argue that we are, or have been, in the mainframe stage of robotics and are on the verge of evolving towards the general-purpose personal computer. But before we start the discussion of whether robotics might bridge the gap from specialized to generalized systems, let’s have a look at why robotics in general has not scaled yet.

🧗🏻 Historical Scaling Constraints

To put it very simply, it just wasn’t worth it in most cases. In theory it makes a lot of sense to automate labour, but it doesn’t make any sense when automation increases overall cost (and also risk). Historically, a robot system would require a roughly seven-figure upfront investment, in most cases paid to unproven startups. The hardware you got was then not performant enough for complex, variable and perception-dependent tasks, so (partial) redesigns of manufacturing processes were necessary, leading to additional integration cost. The integration itself took weeks or months, resulting in costly downtime unless the line was a new one built from scratch. When the system was finally up and running, its inherent complexity made it very vulnerable to errors, again resulting in frequent downtime. Even if the gained efficiency was significant, a single error could easily destroy the resulting ROI. To sum this up, historically it didn’t make much sense for most companies to introduce robots. It only made sense for highly specialized and scalable manufacturing settings, or where human labour is just not an alternative (think of extreme payloads regarding size and weight), like in automotive. Thus, it comes as no surprise that, for example, only 25% of warehouses worldwide have implemented some form of automation and only 16% of the UK’s SMEs in manufacturing use robots. The number is even lower for the US, at only 14%. But might this change now?
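To make the "a single error can destroy the ROI" point tangible, here is a back-of-the-envelope payback sketch. Every number in it is a made-up illustration (the function name `payback_years` and all cost figures are my assumptions, not data from any deployment); it only shows how quickly unplanned downtime eats into the business case.

```python
# Hypothetical payback model for a robot cell. All figures are illustrative
# assumptions, not measurements from a real deployment.

def payback_years(upfront_cost, integration_cost, annual_labour_savings,
                  annual_maintenance, downtime_days_per_year,
                  cost_per_downtime_day):
    """Years until the investment is recovered; inf if it never is."""
    net_annual_benefit = (annual_labour_savings
                          - annual_maintenance
                          - downtime_days_per_year * cost_per_downtime_day)
    if net_annual_benefit <= 0:
        return float("inf")  # the robot costs more than it saves
    return (upfront_cost + integration_cost) / net_annual_benefit


if __name__ == "__main__":
    # Same system, three downtime scenarios (days of lost production per year).
    for days in (0, 10, 14):
        years = payback_years(
            upfront_cost=1_000_000,        # assumed seven-figure system price
            integration_cost=300_000,      # assumed process redesign + integration
            annual_labour_savings=400_000, # assumed
            annual_maintenance=50_000,     # assumed
            downtime_days_per_year=days,
            cost_per_downtime_day=25_000,  # assumed cost of a stopped line
        )
        print(f"{days} downtime days/year -> payback in {years:.1f} years")
```

Under these assumed inputs, a couple of weeks of downtime per year is enough to push the payback period from a few years to never, which is the mechanism behind the low adoption numbers above.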

⚞ Converging Factors

There are a few external developments, as well as robotics-inherent developments taking place that might shift the robotics business case into a favorable direction, enhancing the rate of adoption. Let’s kick things off with the external inflection points.

🌍 External Inflection Points

The last few years have been very tumultuous from a geopolitical and economic point of view. COVID hit, globalized supply chains collapsed and the reliance of whole industries on a few international players and regions became painfully clear. Simultaneously, western economies (finally) realized that emerging economies like China are no longer emerging and looking for their place in the global economy, but are rather straight-up rivals. Paired with a cultural clash, Russia’s war on Ukraine and tensions around Taiwan as a manufacturing powerhouse for semiconductors, voices calling for the resilience and sovereignty of western economies have gotten louder and louder. For the first time, western economies demand the reshoring of once domestic industries, like semiconductors in the US.

But even if western economies wanted to reshore whole industries, they simply lack the necessary labor, with no improvement of the situation in sight. And if they do find enough labor to fill positions, these industries - like manufacturing - are just incredibly unattractive to work in, resulting in employee turnover rates of around 40%. In contrast to that, talent in robotics has grown by a factor of 13 over the last decade, while large parts of robotics have simultaneously shifted towards machine learning and software, opening up the space to an even larger talent base. So, looking through an external lens, the willingness and necessity to re-domesticate industries is paired with a shift of talent in favour of claiming back said industries. External inflection points seem to be setting the stage for a robotics revolution. Let’s have a look at the robotics-inherent, internal inflection points.

🦾 Internal Inflection Points - Hardware

The robotics stack has changed immensely in the last 10 years alone. Looking at changes in hardware, we can see that no part of a robot was left untouched by progress. This starts with a sharp decline in the cost of motors and actuators, as well as additional prototyping capabilities through e.g. 3D printers, which not only make the production of robotics hardware affordable, but also improve overall development speed. Additionally, adjacent technologies that used to limit robotics performance in interactive environments leapfrogged. The power of the perception-related compute stack improved from a single TFLOP to 2000 TFLOPs within six years. The other parts necessary for robots to see and feel, like sensors and LiDAR, improved in the same manner. I can still remember my time in Formula Student, building an autonomous race car in 2017 with a perception stack costing as much as a mid-range sedan. The current iPhone is twice as powerful as that.

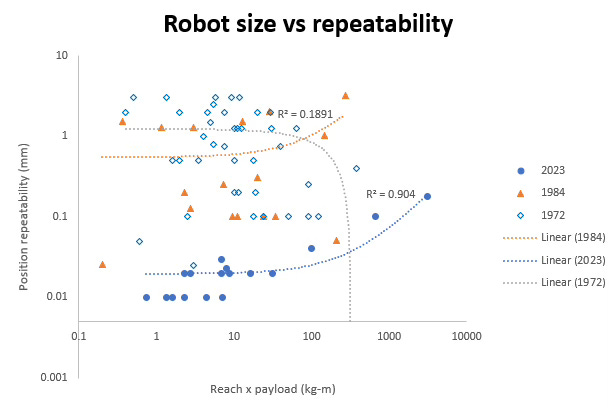

So let’s have a look at how that impacted the overall cost of robotics. There are interesting studies that aggregate robotics cost into a single curve, which is very simple to digest, but also an oversimplification of the complexity of hardware - like this study based on data from the International Federation of Robotics. Below you can have a look at a more granular view of the cost of actual industrial robots that have been deployed for industrial use-cases. You can also see repeatability benchmarks.

The key take-aways from both of these diagrams are the following:

Firstly, robots are indeed a lot cheaper for the same payload they can handle, no surprise here.

Secondly, robot cost is now more strongly correlated with the actual size of the robot, reflecting the payload. This sounds counterintuitive and negative at first, but simply reflects that the fixed costs of robots are continually decreasing. The investment to be made no longer depends as much on the question “robot yes or no?” but rather on the question “how large should the robot be?”. Thus, robots are now more accessible for simpler tasks than they ever were before, opening up cost competitiveness for a ton of niche use-cases.

Thirdly, looking at the repeatability, robots are 50-100 times more precise than their predecessors in the 80s, widening the problem space robots can be applied to by a large degree - problems requiring high precision and predictable quality benchmarks.

🧑🏻💻 Internal Inflection Points - Software

Let’s have a look at the second building block of robots, the software that runs them. The main innovation here is much more focused than on the hardware side of things, namely the progress in machine learning in its different forms.

LLMs and multimodal models enabled a merging of computer vision, natural language and other sensor inputs, boosting the capabilities of robots to interact with, perceive and learn from their environment. One of the prime examples is SayCan, a model that grounds language instruction inputs in a wealth of semantic knowledge about the world, enabling robots to act in a contextually appropriate way within their environment. Other examples are RT-2, PaLM-E, EUREKA, RFM-1 and many more. These models are also called Vision-Language-Action models (VLAMs), due to their multimodal capabilities resulting in robot action. To make these models useful for a broad range of tasks, 33 academic labs have pooled data from 22 different robot types in the Open X-Embodiment dataset to create a general-purpose robotics model, RT-X, that performs robustly in novel and previously unseen tasks. Another important advance in this regard was zero-shot imitation, a technique for imitating robotic behaviour from visual observations alone. The bottom line is that it has become comparably easy and cheap to interact with robots, get them running for a variety of tasks and make good use of progressing hardware.

💾 Internal Inflection Points - Data

But all these models wouldn’t work properly without sufficient quality data to back them up. Luckily, progress didn’t stop at model architecture. One of the most promising and also scalable approaches is using simulations to create new data for robots to be trained on. These simulation techniques have their roots in the gaming industry, where they are used to mimic and simulate worlds in a physically accurate way. In combination with advancements in computer vision and scene understanding - like Neural Radiance Fields (NeRF), which help us create 3D models from 2D images - it is much easier to scale up simulations and, as a result, to create high-quality synthetic data. There are already some examples out there that showcase the power of these techniques and how they can serve as a basis to train robots. Take a look at the linked paper, which showcases a robot walking in San Francisco after being trained solely on simulation data and YouTube videos. There are of course other techniques besides simulation, like data collection through teleoperation of robots by humans, or learning from videos. For an exotic approach to data collection, take a look at Sensei! The short summary of this part is that robots will be able to do much more and learn much faster than ever before, powered by an abundance of data.

🧠 Will Robotics Intelligence Scale?

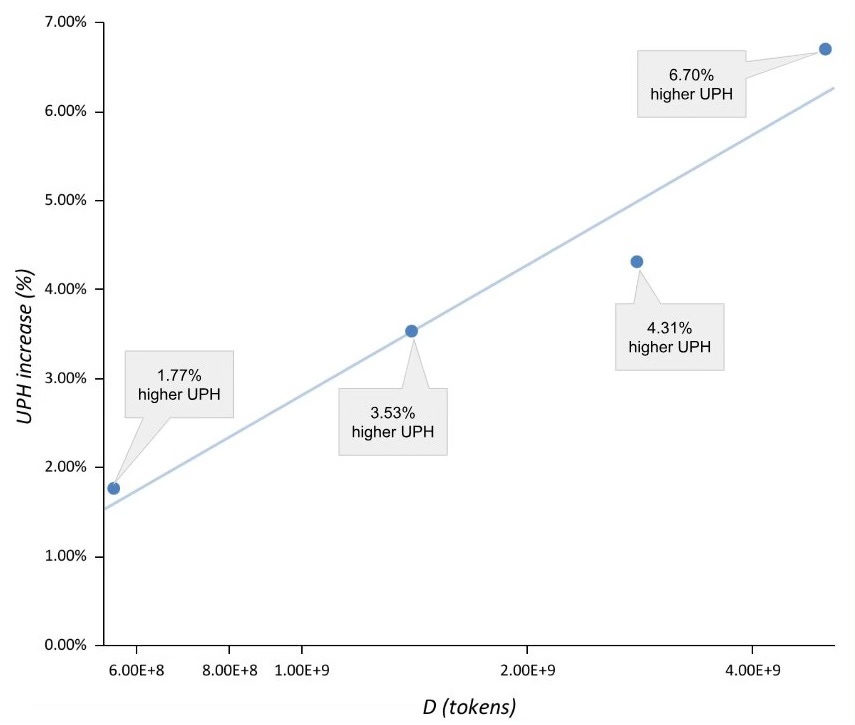

But will all of these inflection points in conjunction lead to a scaling of robot intelligence and use-case dexterity towards the holy grail of general-purpose robotics - and subsequently to a scaling of robotics in general? Well, the recent indications from academia and industry are somewhat in favour of scaling laws for robot intelligence, essentially reporting that as long as the model size of robot foundation models (RFMs), the data they are trained on and the compute are increased, robots can do more and are less prone to errors. Very similar to scaling laws for LLMs.

If this seemingly linear scaling behavior continues, then robotics might have the potential to rise to the most valuable category affected by second-order effects of AI. Given that only a few of the historical startups in robotics survived the last decade and that robotics is very compatible with venture fundraising cycles, it comes as no surprise that this vertical gets the attention it currently gets.

But is it really that simple? Do we only need to linearly extrapolate? And has contrarian technology investing really come down to betting on linear trend-lines? I don’t think so! So let’s dive a few layers deeper into arguments for and against scaling laws. You can find the original arguments in Nishant J. Kumar’s blog, one of the best resources I have found on the topic!

📈 Arguments for Robotics Scaling

Scaling and generalisation success in NLP & CV:

This is the most obvious argument. We’ve seen scaling patterns before in neural networks and datasets, most recently also in the new form of inference scaling with OpenAI’s o1. In this case, Rich Sutton’s The Bitter Lesson holds true again - simply throwing more compute and data at simple and scalable algorithms scales well. It has already been shown that generalisation (to some degree) can be enabled by scaling. Why not again for robotics? And the first signs are already out there, with research progress on RT-X and RT-2.

Inflection points in hardware, software and data:

As discussed above, these inflection points are inevitable. Progress will continue to improve performance in hardware (i.e. compute), in software in the form of models, and in increasingly available data. There is no way of denying this.

The manifold hypothesis:

The manifold hypothesis states that…

…many high-dimensional data sets that occur in the real world actually lie along low-dimensional latent manifolds inside that high-dimensional space. As a consequence of the manifold hypothesis, many data sets that appear to initially require many variables to describe, can actually be described by a comparatively small number of variables, likened to the local coordinate system of the underlying manifold.

To rephrase this, it essentially means that data might seem very complex and needs a lot of variables to be described, but in reality can be very simple if you look at it from the right angle, or from a specific local point of view. Transferring this to the realm of robotics, the thesis (and hope) is, that…

…while the space of possible tasks we could conceive of having a robot do is impossibly large and complex, the tasks that actually occur practically in our world lie on some much lower-dimensional and simpler manifold of this space. By training a single model on large amounts of data, we might be able to discover this manifold.

The bottom line of this thesis is that we might discover some hidden, low-dimensional layers that drastically simplify robot implementation.

Common sense as underlying mechanism for robots:

Most tasks in robotics have a single thing in common: common sense. Just think of handling and transporting a mug from A to B. It is common sense how to hold a mug, otherwise its content spills out. If you drop the mug, it is also common sense to pick it up. The same is true for how to place it on a table (→ e.g. not half over the edge or on top of another mug). If you want to program a robot, all of these constraints might seem like edge cases, but they are actually solved by common sense. So, if we manage to teach LLMs common sense - be it through a huge amount of data or novel inference scaling - we might already solve large parts of the generalisation problem.

📉 Arguments against Robotics Scaling

To keep things balanced, let’s look at the arguments against scaling.

Robotics data is still comparably scarce:

While there definitely is an inflection point regarding simulation data for robots, data itself is still a far cry from what we’re used to in computer vision, language or even video. There is no denying, that data is and will be a major bottleneck for the future.

The data flywheel is simply not there. Compare this to the other modalities, where people are constantly uploading to the internet (and also being motivated to create new data, thanks to all kinds of social media platforms). There is simply no incentive to produce vision data and robot sensory input-action pairs. Will synthetic data be enough to create our mechanical overlords? Hard to tell.

Different modes of robot hardware increase complexity:

This is a different framing of the data problem. Because of the vast differences in robot modalities, the data that is available is very heterogeneous and not really applicable to all the sizes and forms robots come in. This makes the data collection challenge even harder than it already is. And if you think this argument through, it doesn’t stop at collecting data. Even if data collection is solved, can we find a common output space for these large models that fits different kinds of robots?

Variation in deployment environments:

The problem space for robots to operate in is just so incredibly diverse, ranging from factories to homes and office buildings. Making robots work robustly in only one of these settings requires an unknown amount of data, and it is unclear at which point RFMs will be able to generalize enough to make it work. It might be that solving only one of these application areas will require an impractical amount of data.

The cost of energy and compute:

This argument should come as no surprise. Given our experience of how much it costs to train the likes of GPT-4, creating these models is just very expensive (we are speaking in the range of $bn). Given the complexity of the robotic problem space, it is probable that such an endeavour will surpass that cost by an order of magnitude.

But even if it works as well as it did with CV & NLP…

The 99.X problem:

Most use-cases for robots - be it in manufacturing or applications at home - require 99.X% accuracy or even higher to be deployed safely and reliably. Even the best models in vision and language have not reached these levels of accuracy (yet). So even if data collection, training and models scale in robotics comparably to CV & NLP, it simply won’t be enough. Think of Tesla, which has all the resources in the world and access to data thanks to continuous data collection by their cars, and is still nowhere near 99.X% accuracy in the task of self-driving. So it seems not far-fetched to argue that similar approaches in robotics will face the same challenges.
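To get a feeling for why the last decimal places are so expensive, here is a small sketch. It assumes that the error rate falls as a power law in the amount of training data, error = c * D^(-alpha), which is the shape reported for LLM scaling laws; whether robot models follow it, and with which exponent, is an open question, so the `alpha` values below are purely illustrative assumptions.

```python
# Assumption: error rate follows a power law in dataset size D,
#   error = c * D ** (-alpha)
# The exponents below are illustrative, not measured for any robot model.

def data_multiplier(error_now, error_target, alpha):
    """Factor by which the dataset must grow to move from error_now to error_target."""
    return (error_now / error_target) ** (1.0 / alpha)


if __name__ == "__main__":
    # Going from 99% to 99.9% accuracy means cutting the error rate by 10x.
    for alpha in (0.5, 0.3, 0.1):
        factor = data_multiplier(error_now=0.01, error_target=0.001, alpha=alpha)
        print(f"alpha={alpha}: roughly {factor:.3g}x more data needed")
```

The constant c cancels out, so only the exponent matters: the flatter the curve, the more brutally each additional "9" is paid for in data, which is why linear extrapolation towards 99.X is so tempting and so misleading.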

Long time-horizon tasks and compounding errors:

A lot of tasks of interest for robots are tasks that have longer time horizons, as they require a sequence of actions and manipulations over time. Given the error rates in handling tasks, these errors will compound with every single action until the task cannot be fulfilled anymore.

Consider the relatively simple problem of making a cup of tea given an electric kettle, water, a box of tea bags, and a mug. Success requires pouring the water into the kettle, turning it on, then pouring the hot water into the mug, and placing a tea-bag inside it. If we want to solve this with a model trained to output motor torque commands given pixels as input, we’ll need to send torque commands to all 7 motors at around 40 Hz. Let’s suppose that this tea-making task requires 5 minutes. That requires 7 * 40 * 60 * 5 = 84000 correct torque commands. This is all just for a stationary robot arm; things get much more complicated if the robot is mobile, or has more than one arm.
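The arithmetic in the quote, and what it means for compounding errors, can be sketched in a few lines. The per-command success rates are my own illustrative assumptions, and treating every command as an independent pass/fail event is a deliberate simplification (real controllers can often recover from a bad command), so read the result as an intuition pump rather than a prediction.

```python
# Numbers for the tea-making example come from the quote above.
MOTORS = 7            # motors of the stationary arm
RATE_HZ = 40          # torque commands per motor per second
DURATION_S = 5 * 60   # five minutes of tea-making


def total_commands(motors=MOTORS, rate_hz=RATE_HZ, duration_s=DURATION_S):
    """Number of torque commands issued over the whole task."""
    return motors * rate_hz * duration_s


def task_success_probability(per_command_success, n_commands):
    """Chance that every command is correct, ASSUMING independent errors."""
    return per_command_success ** n_commands


if __name__ == "__main__":
    n = total_commands()
    print(f"torque commands needed: {n}")
    # Hypothetical per-command success rates, chosen for illustration only.
    for p in (0.999, 0.9999, 0.99999):
        print(f"per-command success {p}: task success "
              f"{task_success_probability(p, n):.4f}")
```

Even under this crude model, a per-command success rate that sounds excellent in isolation leaves the full five-minute task failing more often than not, which is the core of the compounding-error argument.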

If you transfer this to the realm of NLP and language models, these compounding errors are the reason that models are really bad at producing coherent novels and long-form writing. And finally, to wrap the arguments up, some…

🖇️ Related Arguments on Robotics Scaling

Deployment safety and control mechanisms:

While we do not understand as well as we would like why neural networks and all these models we train learn what they learn, robots can still be expected to operate safely. While it is not possible to provide theoretical safety guarantees for these models, robot control and planning algorithms themselves are useful in certifying system safety.

Human-in-the-loop as potential solution:

Putting humans in the control loop of robots might be a useful way of mitigating some of the challenges, especially the ‘99.X problem’. This has already worked in a lot of ML-related use-cases where ML enhances the capability of humans (e.g. autocompleting code for software engineers), so why not in robotics? It definitely isn’t as straightforward, given that a robot and a human interacting in the same space is potentially dangerous, but it is an interesting mitigating avenue to go down in order to solve at least some of the scaling problems.

Creative ways of mitigating the data problem:

I already described one of the potential solutions above: simulations to ease the data collection problem. Potentially, realistic simulators are a scalable way of training robot policies, which can then be transferred into the real world. Another approach is, for example, to take existing vision, language and video data and merge it with small amounts of robotics data for fine-tuning, as the RT-2 model tries to accomplish.

Combining classical and learning-based approaches:

One promising route to solving scaling is to combine classical approaches with learning-based ones - for example, using learning for vision tasks and classical SLAM (Simultaneous Localization and Mapping) and path-planning for the rest of the robot “brain”. Take a look at the linked paper to get an impression.

📌 The Key Takeaways from these Arguments

Scaling up robot learning might not ‘solve’ robotics in every application area, but it is still a promising and valid route to go down in order to improve overall robot performance. We also might gain some interesting insights on the way. Just don’t fall into the trap of thinking that we can simply linearly extrapolate until it’s ‘solved’.

Diversifying the approaches should be pursued. While simply scaling models and data is still a valid approach, it doesn’t make sense to ignore other potential routes, like the combination of classical and learning-based policies.

Diversifying data collection seems to be essential in getting the best ‘bang for the buck’ on training and data collection. This means going beyond your average SCARA robot and looking into mobile robots and other areas. These data points will have most impact on advancing generalisation.

Usability of robots is one of the lowest-hanging fruits. Yes, robotic data does not have the flywheel effects of video and language, but by keeping entry barriers as low as possible for non-experts, there might be a chance that adoption scales further and, with it, the amount of data being generated.

Encourage transparency of failures relating to approaches undertaken by robot researchers. I know, it is not sexy to report failures in academia and certainly won’t make life in academia easier, but the idealist in me wants to see a world, where we don’t repeat the same (resource intensive) mistakes. But changing academia will be harder than scaling robot intelligence, so no hopes here.

🎯 Areas of Opportunity

Now that we have a broad overview of the field of robotics and recent developments, let’s wrap things up with my personal outlook on the field and where I see opportunities arising, from the perspective of an early stage tech-investor.

🤖 Humanoids - Yay or Nay?

Let’s start with the most discussed and ‘controversial’ question: Are humanoids investable? To keep it short: from the perspective of a £50M pre-seed fund, no, it makes no sense at all. From the perspective of a £5bn multi-stage fund, probably, if you really believe in it and that the timing is right?

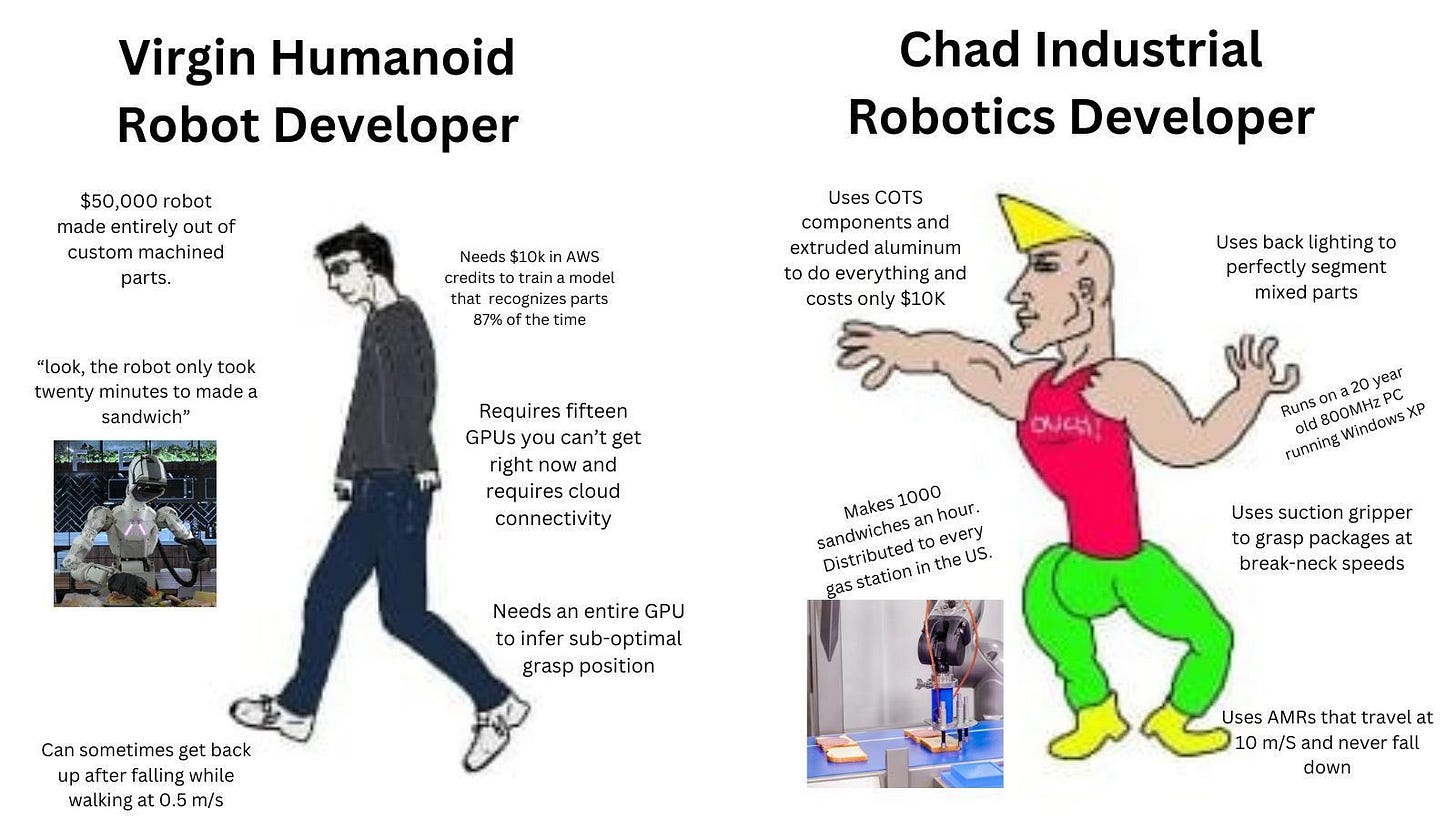

My (short) anti-thesis is the following: Humanoids are at the forefront of the data problem. There simply is no data (or nearly none), because - as with any technology - we started by deploying very specialized and verticalized robots and tried to tackle all the things humans are NOT made for. Thus, these areas are the ones that will see the impact of generalization first and, as a result, a widening of application areas. Yes, the world is (at least for now) made for humans to a large degree, but mostly for tasks that are not scalable, not dangerous and not overly unpleasant (yes, doing laundry is unpleasant, but not as unpleasant as sorting industrial waste). The tasks that are left are not really commercial, and I don’t think most people want to add a cost-of-robot-laundry crisis to their cost-of-living crisis. The only task that comes to mind is military deployment (as an offensive technology), which is less commercially motivated. Don’t get me wrong, I fully subscribe to a future that involves humanoids, but I think the timing is way off. I like science fiction as much as the next guy, but I still look for commercial outcomes. To conclude my anti-thesis I want to throw in this high-quality twitter meme:

🏭 Vertical robotics and vertical integration

This might not come as a surprise, but I still believe that highly specialized robots that can unlock industries are an attractive opportunity - just like Ascento in our portfolio! With that, I don’t mean the 100th version of industrial robots and manufacturing applications, but rather markets that look odd at first glance, where the data edge will still be very much proprietary and defensible in the long term. The value here will lie in orchestrating hardware in unique settings.

What I’m more excited about though, are vertically integrated plays that rethink how processes are executed and automated, using clever combinations of automation technology and robotics. A good example would be wet lab automation - like Automata. Given the trend of reshoring whole industries, I believe we sit at a once-in-a-lifetime opportunity to completely restructure industries with the knowledge gained from previous iterations, leapfrogging productivity. A very good example where similar mechanisms were at play is FinTech in emerging markets. Given that there was no incumbent banking infrastructure in place, FinTech startups could completely rethink how finance is done on a larger scale. Sectors I’m particularly excited about in this context are semiconductor manufacturing (→ the new TSMC fab in Arizona surpasses productivity in Taiwan), battery production or anything that benefits highly from autonomous and decentralized production. The big question for me though is: is this viable for a small pre-seed fund? These approaches will need quite a good chunk of money to get started and, unless a move to debt-financing is possible, will presumably be too dilutive.

👨🏻💻 Software and data infrastructure

Simply thinking from an investor perspective, building the general-purpose ‘robot-brain’ is very interesting, given the distribution play it brings. Imagine building and commercializing software that could work for the majority of robot automation with some simple fine-tuning on top. The large multi-stage funds are already betting that this might work out, with companies like Skild and Physical Intelligence. So is there a thesis? From a £50M pre-seed fund perspective, definitely not. The software layer of robotics - either model- or data-wise - improves with size: bigger models and more data lead to better outcomes. Both of these parts of the equation are to a large degree a function of the amount of compute, and compute simply comes down to money spent. There is no way of outcompeting “throwing $200M at the data and model problem” with “throwing £2M at the data and model problem”. Are there alternative pure software or data plays? I don’t really think so, sadly. Maybe in very specialized problem spaces with peculiar underlying data or software orchestration, which is, in its essence, vertical robotics. Think of niche vision models with narrow use-cases and specialized hardware platforms, or some clever data infrastructure. Most of the other arising opportunities, I fear, will fall into the multi-stage fund category, just throwing more money at a problem that scales well with more money.

🦾 Robot hardware

In general, I would stay away from any pure hardware play at the moment. Robot hardware in its standard configurations is a commodity at this point and it will be hard to outcompete. The true value creation lies within the layers beneath, orchestrating the hardware (at least for now). There are interesting emerging areas in hardware though, like soft robotics, which are broadly interesting as they can improve dexterity. Application areas are, for example, surgery and collaborative settings with humans. I don’t think opportunities in these areas are ready for venture though, nor do they target venture-scale markets in their current iterations.

The bottom line: robotics is tough to invest in, but worth monitoring!

🏁 Concluding Remarks

For those of you interested in delving deeper into robotics, check out the following articles and good reads, which have been invaluable to writing this blog:

And that brings us to the end of the 7th episode of Deep Tech Demystified. I hope you enjoyed this deep-dive into robotics!

If you are a founder or a scientist who is building in the field of robotics, please reach out on LinkedIn or via mail (felix@playfair.vc), I would love to have a chat! Cheers and until next time!