Deep Tech Dispatch | #17

Visual microphones | Nextgen compute | Microrobots delivering drugs | Antimatter qubits | Scout helicopters on Mars | Robots with fear and much more

🙋🏻♂️ A Short Preface

Sup nerds. After my (non DeepTech-related) rant two weeks ago about the unfortunate state of venture, we’re officially back on the path of Deep Tech enlightenment. Also sorry for the slight break in publishing, I’ll be back on a regular upload schedule in September!

This week’s dispatch contains the following:

🎤 Visual microphones from Beijing

💻 Nextgen compute chips from indium selenide?

🤖 Microrobots delivering drugs directly to cancer targets

⚛️ Antimatter qubits at CERN

💡 A new path to quantum machine learning from Los Alamos

🚁 NASA and AeroVironment to deploy scout helicopters on Mars

🪂 Supersonic parachute deliveries to Mars from NASA

🧠 Two billion neuron neuromorphic computer from Zhejiang

🦾 China’s Unitree humanoid debuts for $6000

🌀 The ‘productivity paradox’ of AI adoption in manufacturing firms

😱 Robots That Learn To Fear Like Humans Survive Better

Reading time: 15 minutes.

🧑🏻🔬 Science & Research News

The visual microphone from Beijing

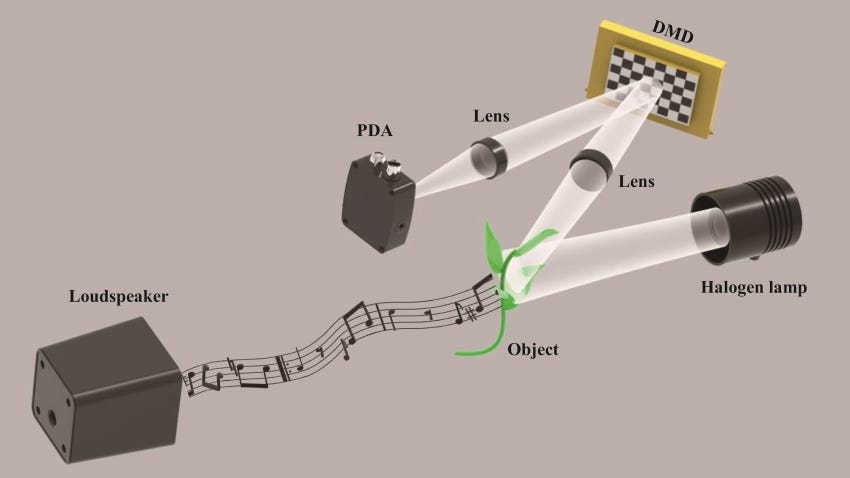

Researchers from the Beijing Institute of Technology have developed a low-cost "visual microphone" that can reconstruct audio by analysing sound-induced vibrations of everyday objects captured by a standard digital camera. This light-based listening technology effectively turns almost any object - a bag of chips, a potted plant - into a functional microphone without ever needing to place a physical device in the room.

On the one hand, it opens up new possibilities for remote sensing, allowing for everything from infrastructure monitoring (listening to the vibrations of a bridge) to non-invasive biological monitoring. On the other hand, it raises significant privacy and security concerns, as it demonstrates that conversations can potentially be eavesdropped on from a distance by simply pointing a camera at an object in the target's room. Welcome to the dual-use nature of Deep Tech.

All of this is made possible by the following principle: sound waves are pressure fluctuations in the air, which make the objects they hit vibrate. With a sensitive enough high-speed camera, the resulting pixel-level distortions of an object can be captured. An algorithm on top then filters out environmental noise, like movements induced by air turbulence, reconstructs the clean vibration signal caused by the sound, and converts it back into audio.
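To make the filtering step a bit more tangible, here is a minimal sketch in Python. It is not the Beijing team's algorithm: the frame rate, the 200 Hz tone, the drift and the moving-average filter are all assumptions I picked for illustration, and the "camera data" is synthetic. It only shows that a tiny vibration buried under much larger slow motion can be pulled back out.

```python
import math

FRAME_RATE = 2000   # frames per second (assumed, hypothetical capture rate)
TONE_HZ = 200       # the "sound" shaking the object (assumed)
N = 2000            # one second of frames

# Synthetic per-frame displacement of a tracked image patch, in pixels:
# a tiny sound-induced vibration plus a much larger slow drift (e.g. air currents).
tone = [0.01 * math.sin(2 * math.pi * TONE_HZ * i / FRAME_RATE) for i in range(N)]
drift = [0.5 * math.sin(2 * math.pi * 0.5 * i / FRAME_RATE) for i in range(N)]
displacement = [t + d for t, d in zip(tone, drift)]


def remove_slow_motion(signal, window):
    """Crude high-pass filter: subtract a centred moving average from each sample."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(signal[i] - sum(signal[lo:hi]) / (hi - lo))
    return out


def correlation(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


recovered = remove_slow_motion(displacement, window=21)

print("raw vs. tone:      ", round(correlation(displacement, tone), 3))
print("filtered vs. tone: ", round(correlation(recovered, tone), 3))
```

In a real system the recovered displacement samples would then be resampled and written out as audio. Note that the frame rate caps what you can hear: a camera can only capture vibrations up to half its frame rate.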

The team from Beijing successfully demonstrated that their system could reconstruct intelligible audio from a variety of objects, even using a standard consumer camera. This is very interesting and scary at the same time - let’s hope this technology finds productive use cases. You can find the news release here and the paper here.

Nextgen compute chips from indium selenide?

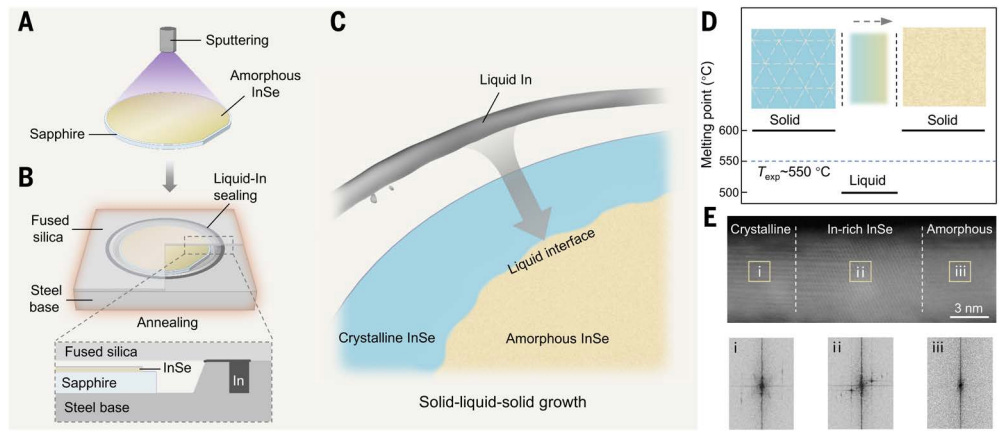

Researchers from Peking University have successfully created the world's first wafer-scale two-dimensional (2D) indium selenide (InSe) semiconductors. InSe has been an interesting contender for novel semiconductors for a while, mainly because electrons move through the material a lot more efficiently than through silicon. At scale this would mean lower power consumption and thus higher performance per “chip”. The challenge, though, has always been producing wafers out of InSe, as the material comes in atom-thick 2D layers, just like graphene. A wafer needs to be composed of multiple layers on top of each other, while the connecting forces between the layers are very weak.

The breakthrough made at Peking University overcomes exactly this manufacturing challenge, and the researchers have for the first time produced a wafer out of InSe. I’ll give you a rough overview of how it works but spare you the details, because this process goes very deep (what I mean is that I don’t really understand it): You take a pile of messy InSe on top of a sapphire wafer. Then you put a layer of solid indium on top of that, creating a sandwich. You heat the solid indium until it is liquid, which then sorts out the messy InSe underneath and… then you have an InSe wafer.

The researchers also built some large-scale transistors on these wafers that demonstrated the promised ultra-high electron mobility. The resulting performance surpassed the international roadmap projections for what silicon will be able to achieve by 2037. Productising this process might have a big impact in the future. You can find more details in the article here.

Microrobots delivering drugs directly to cancer targets

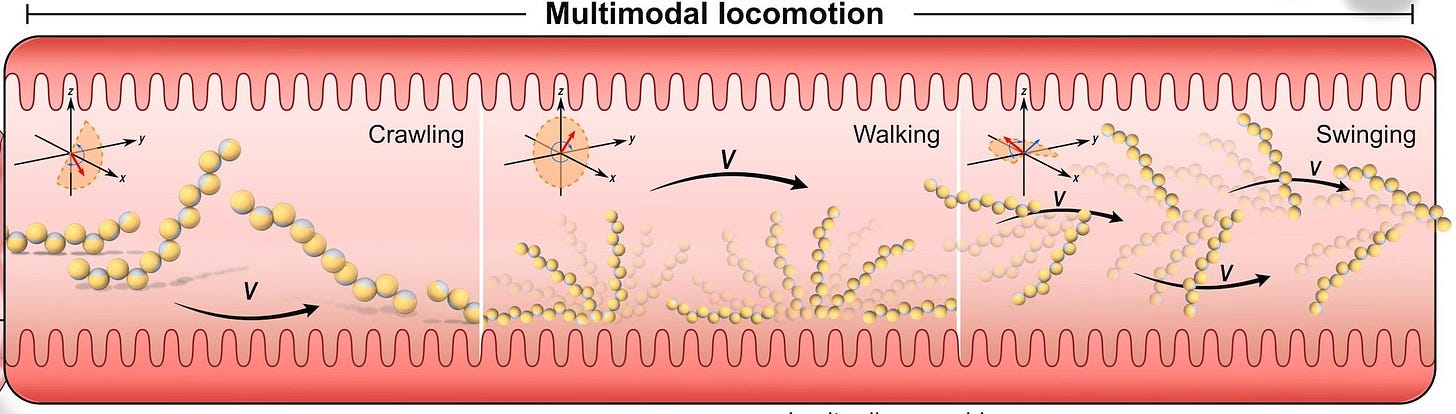

Researchers from the University of Michigan and University of Oxford have developed magnetically steerable, snail-shaped microrobots that can swim through the lungs to deliver cancer-fighting drugs directly to tumors. This solves a major challenge in drug delivery and could dramatically improve outcomes for lung cancer patients while reducing the harsh side effects of chemotherapy.

Getting drugs to the deep, branching airways of the lungs is notoriously difficult; less than 1% of intravenously administered chemotherapy actually reaches its target. This means that 99% of the chemotherapy wreaks havoc on your healthy body. In contrast, the microrobots act like microscopic guided missiles, concentrating the medication precisely where it's needed.

The robot snail is made of a chain of small 3D-printed polymer microrobots, each coated with a special nanoparticle surface made of iron oxides. This coating is key to the robot and makes it magnetic, while avoiding any interaction with lung cells themselves. The shape of the snail is optimised for movement through the complex environment of the lung using external magnetic fields. Once it reaches the target, the robot can release its concentrated dose of drugs to combat the tumour.

In tests using mice, the microrobot delivery method was 16 times more effective than standard intravenous chemotherapy at shrinking tumors. I really hope humanity will look back on chemotherapy in a few decades as a distant primitive tool of the past. You can find more details in the news articles here, and the full paper here.

Antimatter qubits at CERN

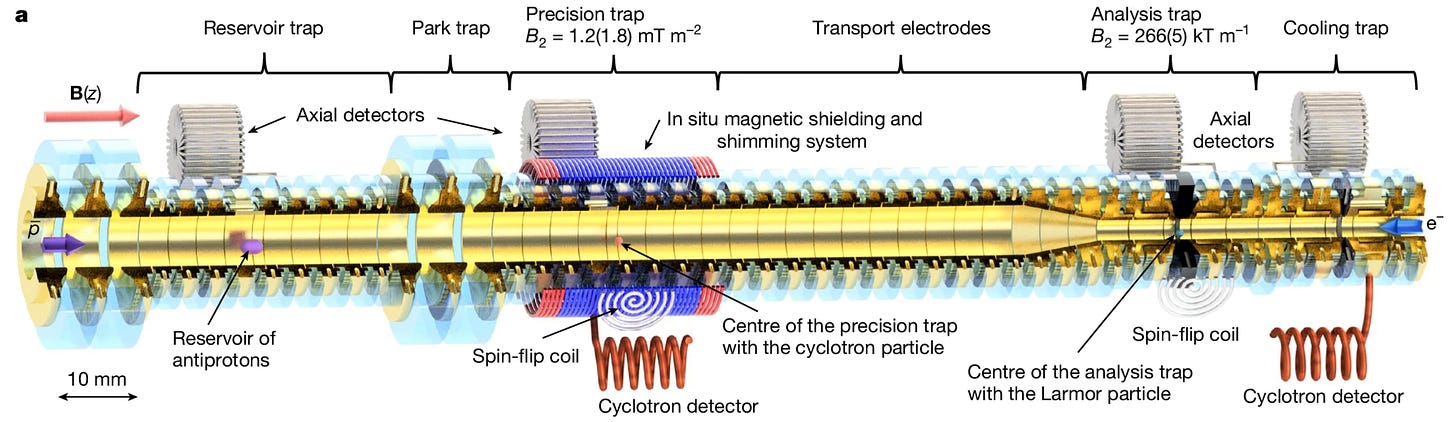

Researchers from CERN have demonstrated, for the first time, an antimatter quantum bit, or qubit, made from an antiproton - the antimatter counterpart of a proton. So we now have another form of qubit that researchers can work with. This form of qubit is not intended for quantum computers, though, but purely to probe one of the biggest and most fundamental mysteries of physics:

Why is the universe made almost entirely of matter, when the Big Bang should have created equal amounts of matter and antimatter? The Standard Model of particle physics predicts that matter and its antimatter counterpart should be perfect mirror images. If scientists can find even a tiny crack in this symmetry - any difference in how a proton and an antiproton behave - it could finally explain the cosmic imbalance and rewrite our understanding of the universe.

The device to test this on antimatter is pictured below. It works by essentially trapping an antihydrogen atom (one antiproton and one positron) with strong magnetic fields, cooling the antihydrogen down to near absolute zero with a laser and then forcing the positron to “jump” from its lowest energy level to a higher one.

This transition of the positron to a higher level could be measured within this experiment and is exactly the same as it would occur in a normal hydrogen atom. So no differences between antimatter and matter in this instance. No real-world applications for this research, but the good news is the world is saved and physics continues as usual. Wonderful. You can find the article here and the paper here.

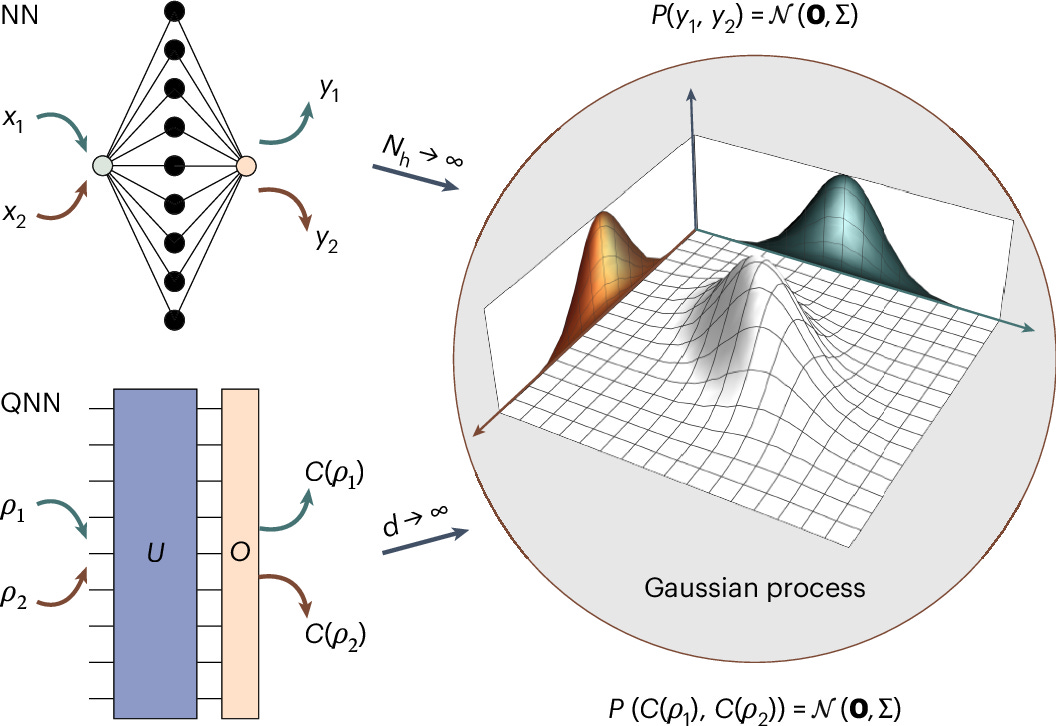

A new path to quantum machine learning from Los Alamos

Why isn’t quantum machine learning a big thing yet? Well, for one, quantum hardware is obviously still not there (yet), and for another, researchers struggle to transfer concepts from machine learning (and neural networks specifically) into the quantum realm.

One of the resulting issues of translating algorithms into quantum is the “barren plateau” phenomenon. Scientists would design a quantum algorithm, only to find that as the problem (and the number of qubits) got bigger, the algorithm would hit a "barren plateau" - a vast, flat landscape in the mathematical space where it couldn't find any useful gradients to learn from. So if the algorithm is looking for optima - i.e. local and global valleys representing an optimal solution it can move towards - it simply couldn’t find any. As it turns out, simply copying concepts from classical neural networks to quantum algorithms doesn’t work.
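If you want a feel for why gradients can vanish as a problem grows, here is a toy illustration. Loud caveat: this is a purely classical stand-in, not a quantum simulation and not anything from the Los Alamos paper. I assume a made-up cost function C = cos(θ₁)·cos(θ₂)·…·cos(θₙ), where n plays the role of the number of qubits, and sample its gradient at random starting points. For this particular toy the gradient variance works out to exactly 0.5ⁿ, so it shrinks exponentially with n.

```python
import math
import random


def gradient_sample(n, rng):
    """Partial derivative of C = prod(cos(theta_i)) w.r.t. theta_0 at a random point."""
    thetas = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    grad = -math.sin(thetas[0])
    for theta in thetas[1:]:
        grad *= math.cos(theta)
    return grad


def gradient_variance(n, samples=20_000, seed=0):
    """Monte Carlo estimate of the gradient variance over random parameter choices."""
    rng = random.Random(seed)
    grads = [gradient_sample(n, rng) for _ in range(samples)]
    mean = sum(grads) / samples
    return sum((g - mean) ** 2 for g in grads) / samples


for n in (2, 6, 10):
    # Second column: sampled estimate. Third column: closed-form value 0.5**n.
    print(n, gradient_variance(n), 0.5 ** n)
```

With a gradient that small, an optimiser starting at a random point has almost nothing to follow, which is the flat landscape the researchers describe.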

“The issue with quantum neural networks is that we were copying and pasting classical neural networks and putting them in a quantum computer,” said Martin Larocca, a Lab scientist who specializes in quantum algorithms and quantum machine learning. “This appears to not work as easily as one could have hoped. Hence, we wanted to go back to basics, and find simpler, more restricted ways of learning, but which could actually work and also have certain guarantees.”

Researchers from Los Alamos have now resolved this roadblock for quantum machine learning by introducing Gaussian processes as the learning functions for quantum neural networks. Gaussian processes themselves are widespread in forecasting. Usually there is an assumption that a distribution follows a simple bell curve, a Gaussian. With new observations of something you want to predict you can update this bell curve and find a better distribution. This gradual and constrained learning process can also - as it turns out - be applied to quantum neural networks, making them finally learn. You can find the article here and the paper here.
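The "update the bell curve with new observations" idea can be sketched in a few lines. To be clear about what this is: a single-variable Bayesian update of a Gaussian belief, which is the simplest building block behind Gaussian processes, not a full Gaussian process and not the Los Alamos method. The prior, the observations and the noise level are numbers I made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gaussian:
    mean: float
    variance: float


def update(belief: Gaussian, observation: float, noise_variance: float) -> Gaussian:
    """Bayesian update of a Gaussian belief after one noisy observation."""
    # Precisions (inverse variances) add up; the new mean is a precision-weighted average.
    precision = 1.0 / belief.variance + 1.0 / noise_variance
    mean = (belief.mean / belief.variance + observation / noise_variance) / precision
    return Gaussian(mean=mean, variance=1.0 / precision)


belief = Gaussian(mean=0.0, variance=4.0)  # broad, uninformed starting guess (assumed)
for y in [2.1, 1.9, 2.0]:                  # hypothetical measurements
    belief = update(belief, y, noise_variance=1.0)
    print(belief)
```

Each observation pulls the mean towards the data and shrinks the variance, so learning is gradual and constrained by construction.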

🔬 Technology Frontiers

NASA and AeroVironment to deploy scout helicopters on Mars

AeroVironment and NASA's Jet Propulsion Laboratory (JPL) have unveiled the "Skyfall" mission concept. They plan to drop a swarm of six autonomous scout helicopters onto Mars. The idea is to make Mars exploration faster, cheaper, and more resilient. The success of the Ingenuity helicopter proved that flying on Mars is possible. Skyfall scales that idea up, building an entire mission around a fleet of its descendants. Their primary job would be to find and certify the safest, most resource-rich landing sites - especially those with water ice - for the first human missions to the Red Planet. You can find the article here.

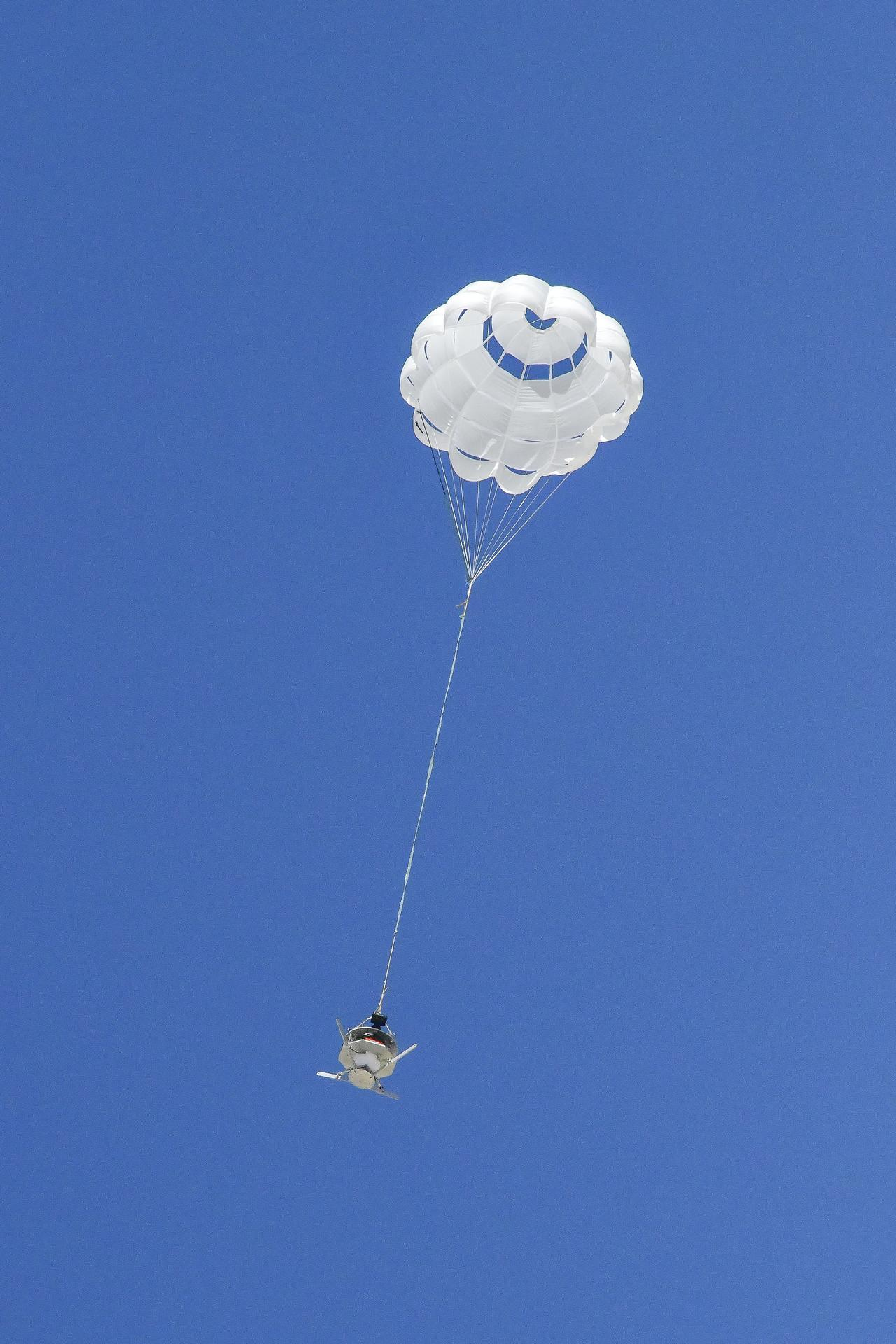

Supersonic parachute deliveries to Mars from NASA

Speaking of NASA and Mars, NASA is currently working on new parachute systems that enable it to land heavier payloads on the Red Planet. Landing these heavy payloads on Mars is incredibly difficult, and the parachute is a critical point of failure, because it gets deployed at supersonic speeds in the thin Martian atmosphere. When the Perseverance rover landed, its parachute experienced 37,000 kg (over 80,000 pounds) of force. To land even bigger payloads for future human missions, we need stronger, more reliable parachutes.
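For a sense of scale, here is the unit conversion behind that parachute figure. I am assuming "37,000 kg of force" means kilograms-force, which is my reading and not something the article spells out.

```python
STANDARD_GRAVITY = 9.80665   # m/s^2, exact by definition
KG_PER_POUND = 0.45359237    # exact by definition

load_kgf = 37_000                         # figure quoted above, read as kilograms-force
load_newtons = load_kgf * STANDARD_GRAVITY
load_lbf = load_kgf / KG_PER_POUND

print(f"{load_newtons / 1000:.0f} kN, {load_lbf:,.0f} lbf")
```

That is roughly 363 kilonewtons, and it confirms the "over 80,000 pounds" in the text.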

The problem is that our computer models for predicting these forces are incomplete. NASA has now developed a new sensor system designed to gather the real-world data needed to build better models and, ultimately, better parachutes. In a recent test simulating a high-speed drop, engineers successfully demonstrated this system. The data will be used to validate and improve NASA's simulations, making future supersonic parachute designs for Mars missions safer and more reliable. You can find the article here.

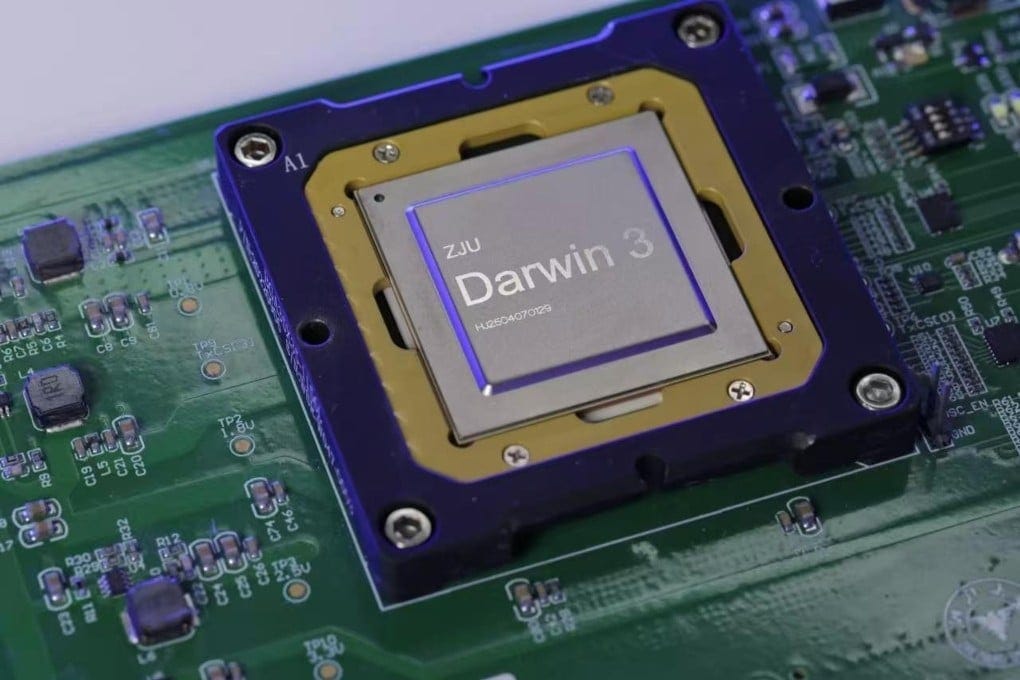

Two billion neuron neuromorphic computer from Zhejiang

Researchers from Zhejiang University have unveiled their 2 billion neuron-strong neuromorphic computer called “Darwin Monkey”. The architecture itself mimics the workings of a macaque monkey’s brain with the promise of low-power applications in AI. If neuromorphics (brain-inspired computing) interests you, I’ve linked my explanatory article here. The Darwin Monkey has been successfully deployed by the researchers to complete tasks like content generation, logical reasoning and mathematics, using DeepSeek models. With its 2 billion neurons it dethrones the previous market leader from Intel, unveiled in 2024. You can find the article here.

China’s Unitree humanoid debuts for $6000

China’s Unitree now offers a humanoid for just below $6000. I’ll leave a comment from Benedict Evans’ recent newsletter here that sums up my opinion:

We’ve clearly reached some kind of turning point in limbs and robotics: China’s Unitree is now selling a $6000 humanoid device that can turn cartwheels. This comes partly from AI and partly from better batteries and motors. However, we should remember Moravec's paradox, and that in general, what’s hard for people is easy for machines and vice versa: backflips are a lot easier for a machine than making a cup of coffee, let alone finding the coffee in a strange kitchen. Making your robot with legs instead of wheels doesn’t mean it’s any more intelligent than a Roomba, so where is that useful? After all, if you want a robot to do your laundry, that’s a washing machine.

💡 Articles & Ideas

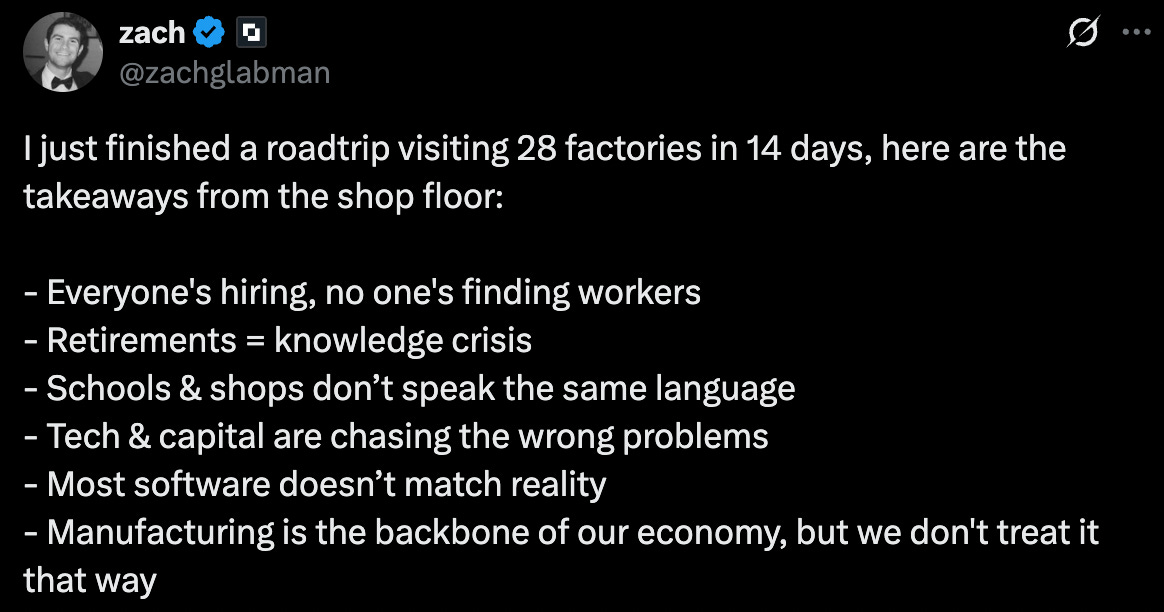

The ‘productivity paradox’ of AI adoption in manufacturing firms

Despite huge investments in AI across the board and seemingly wide diffusion of AI usage, there are still doubts about true productivity growth. Researchers from MIT Sloan have now investigated this apparent paradox in the manufacturing context to find out why this is the case. What they’ve found is actually quite intuitive:

Adopting AI in manufacturing initially causes a decrease in productivity, a phenomenon described as the "J-curve" trajectory where short-term losses precede long-term gains. This temporary dip is more pronounced in older, more established firms due to their rigid structures and legacy systems, which struggle to adapt to new technologies. The conclusion is that AI is not a simple plug-and-play solution but requires significant systemic changes, including investments in data infrastructure, staff training, and workflow redesign. However, they also find that firms that successfully navigate these adjustment costs eventually experience stronger growth in output, revenue, and employment, with digitally mature and larger companies seeing the most significant benefits in the long run.

While the research was done in the context of manufacturing firms, I think it might also be applicable to other sectors. If this assumption is true, it seems like AI - after digitalisation - might be killing off all change-resistant organisations, but also that the main benefits of AI will be concentrating around Big Tech? You can find the article here.

🤔 Odd Read of the Week

Robots That Learn To Fear Like Humans Survive Better

Episode 261 of robots becoming more human. This time researchers showed that programming robots to develop a "fear response" improves the robots’ risk assessment and survival in dynamic environments. While machines having a “fear response” sounds a bit odd, it essentially implements a simple form of emotional, quick reaction to unfamiliar stimuli - like freezing or ducking when we hear a loud sound - mimicking how the human brain induces reflexes.

According to a theory on how our brains work, called the dual-pathway hypothesis, this reaction is elicited by the “low road,” neural circuitry responsible for emotions, driven by the amygdala. But when our brains instead use experience and more articulated reasoning involving our prefrontal cortex, this is the second, “high road” pathway to respond to stimuli.

Rizzo and a doctoral candidate in his lab, Andrea Usai, were curious to see how these two different approaches for confronting risky situations would play out in robots that have to navigate unfamiliar environments. They began by designing a control system for robots that emulates a fear response via the low road.

By implementing a dedicated reinforcement learning control system that simulates this “low road”, robots can make safer decisions when encountering dangerous obstacles - because they react like a fearful human would to something unfamiliar.

For example, in one of the scenarios with hazards dynamically moving around, the low-road robot navigated around dangerous objects with a wider berth of about 3.1 meters, whereas the other two conventional robots tested in this study came within a harrowing 0.3 and 0.8 meters of dangerous objects.

Usai says there are many different scenarios where this low-road approach to robotics could be useful, including cases of object manipulation, surveillance, and rescue operations, where robots must deal with hazardous conditions and may need to adopt more cautious behavior.

You can find the article here and the paper here.

📚 Further Goodreads

Why is it so hard for startups to compete with Cadence?

And when chip designers working in modern process nodes spend millions of dollars on mask sets and manufacturing runs, they are very unlikely to take a risk on a tool that isn’t officially certified and supported by TSMC.

TSMC is a huge company, without a major incentive to work with startups to certify their tools. When people ask me about competing with Cadence, my tongue-in-cheek response would be that the best first step would be to marry one of the daughters of Morris Chang, TSMC’s legendary founder. It’s a joke, but it rings a bit true -- to make waves in the EDA industry, you need your tools to get certified, and for that, you need to somehow curry favor with TSMC.

I just finished a roadtrip visiting 28 factories in 14 days

Diverging Models of AI Development, and bifurcation in global AI trajectories: The U.S. centers on abstract, cloud-based intelligence (e.g., LLMs), while China develops AI tightly integrated with physical systems and infrastructure. This divergence has implications for technological standards, ethics, and the global diffusion of AI technologies.

Infrastructure as an Advantage/Differentiator: China's infrastructural focus enables deployment of AI in real-world contexts at scale, especially in mobility, urban governance/management, and robotics. This presents a comparative advantage not often captured in typical U.S.-China tech rivalry narratives.

Kimi K2 and when “DeepSeek Moments” become normal

We need leaders at the closed AI laboratories in the U.S. to rethink some of the long-term dynamics they're battling with R&D adoption. We need to mobilize funding for great, open science projects in the U.S. and Europe. Until then, this is what losing looks like if you want The West to be the long-term foundation of AI research and development. Kimi K2 has shown us that one "DeepSeek Moment" wasn't enough for us to make the changes we need, and hopefully we don't need a third.

Ultimately, it is how you use AI, rather than use of AI at all, that determines whether it helps or hurts your brain when learning. Moving away from asking the AI to help you with homework to helping you learn as a tutor is a useful step. Unfortunately, the default version of most AI models wants to give you the answer, rather than tutor you on a topic, so you might want to use a specialized prompt. While no one has developed the perfect tutor prompt, we have one that has been used in some education studies, and which may be useful to you and you can find more in the Wharton Generative AI Lab prompt library.

NASA races to put nuclear reactor on Moon and Mars

The reactor directive orders the agency to solicit industry proposals for a 100 kilowatt nuclear reactor to launch by 2030, a key consideration for astronauts’ return to the lunar surface. NASA previously funded research into a 40 kilowatt reactor for use on the moon, with plans to have a reactor ready for launch by the early 2030s.

The first country to have a reactor could “declare a keep-out zone which would significantly inhibit the United States,” the directive states, a sign of the agency’s concern about a joint project China and Russia have launched.

When you really interrogate what technology in manufacturing actually is, of course, you can say part of it is about patents, about things that can be written down, about hard knowledge in manufacturing.

But there's actually another huge part that's tacit knowledge. How do you manufacture an iPhone? You need many skilled workers going through many complete assembly lines to put this iPhone together. It's not like you can write the iPhone assembly steps in a piece of paper, and then have new American workers read that paper and immediately go into the factory and start working.

If America needs to reindustrialize, you might really still need to bring Chinese workers back to continue training American workers before you'd have a relatively effective production process. Is America's imagination about reindustrializing very arrogant? Massively ignoring this kind of tacit knowledge in manufacturing and China's accumulated experienced workforce.

Customs tariffs: The world is perceived as a combat arena and no longer as a space for cooperation

This policy is a complete break with the internationalist vision that prevailed from the presidency of Franklin D. Roosevelt to that of Barack Obama. It is now being replaced by a geo-economic and “competitiveness” vision of the world. If, ultimately, the aim is to refocus the global economy on the United States, this vision already exists; it is the one that China is pursuing, with the aim of dislodging the United States from its still hegemonic position. But does Donald Trump really have the means to realise his ambitions? Can he apply the same methods as China without jeopardising the economic stability of his own country?

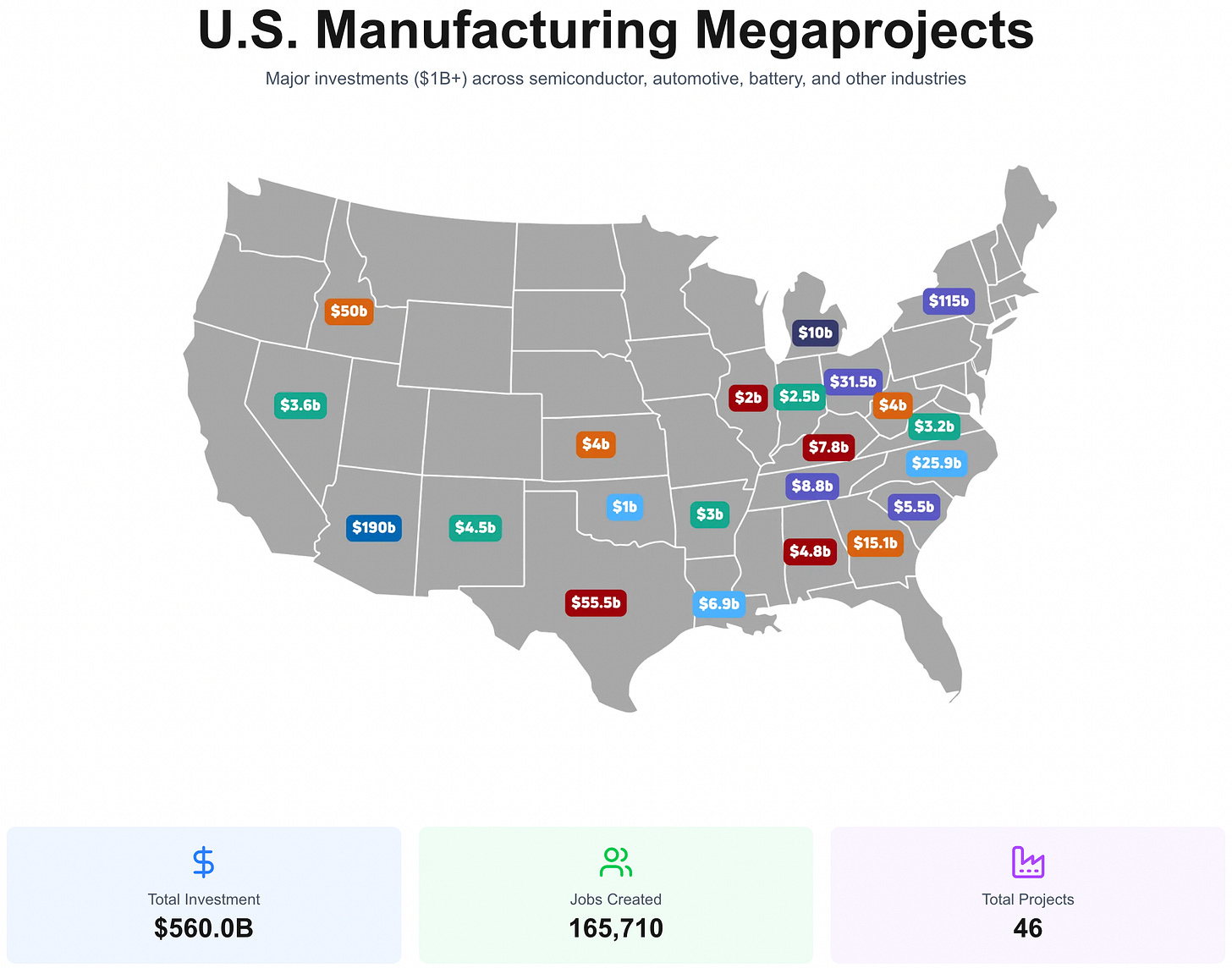

U.S. Manufacturing Megaprojects