The State of Neuromorphic Computing

Promises of efficient, brain-inspired compute architectures

👋 Hi there! My name is Felix and welcome to Deep Tech Demystified! Once a month I will publish topical overviews or deep-dives about Deep Tech topics that excite me. I will try to make the writings comprehensible and accessible for people from all backgrounds, whether that’s business, engineering or something else entirely.

🧠 What is Neuromorphic Computing?

Neuromorphic computing draws inspiration from the structure and function of the human brain, much like artificial neural networks (ANNs), but it is implemented directly in hardware. Its primary objective is to emulate the brain's capacity for energy-efficient, parallel information processing. Unlike traditional von-Neumann architectures, neuromorphic architectures integrate memory and processing within individual "neurons", enabling in-memory computation. This approach counters the memory bottleneck of von-Neumann architectures, where data must be shuttled back and forth between memory and processing units, which costs both energy and computation speed.

If this all sounds like gibberish to you, take a look at my first article in which I give a broad overview of the current state and limitations of compute, like the von-Neumann bottleneck!

The practical implementation of brain-inspired computing relies on specialized hardware and algorithms tailored to this in-memory computation paradigm. In general, neuromorphic hardware can be realized as analog, digital or mixed-mode (analog/digital) designs. While digital implementations are more flexible (e.g. via FPGAs), analog implementations promise exceptionally low power consumption on small footprints, which makes them well suited for applications where today’s digital circuits hit their power and size limits. I will therefore focus on the analog implementation. If the technical aspects do not interest you, feel free to jump to the Application Relevance chapter.

💾 Neuromorphic Hardware

Memristive devices, so-called memristors (i.e. “memory resistors”), are promising candidates for efficient hardware implementations of neuromorphics because they can emulate the synaptic plasticity observed in biological synapses. Synaptic plasticity can be defined as “the activity-dependent modification of the strength or efficacy of synaptic transmission at preexisting synapses”, i.e. the mechanism that allows the brain to store memories and adjust to new experiences.

A memristor is an electronic component whose electrical resistance can be adjusted. Its resistance depends on the voltage previously applied to it or on the amount of electrical charge that has passed through it. If the voltage supply or current flow is interrupted, the currently set resistance value is retained without any further energy supply. Thus it “memorises” its resistance. This principle differentiates memristors from volatile forms of memory such as DRAM, where data is stored as an electric charge that leaks away, so data cannot be retained without a power supply. Memristors were originally developed as a non-volatile memory technology to improve storage performance, but their favorable characteristics have drawn attention to their potential for enabling novel computing platforms in analog and neuromorphic computing.
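To make the “memorising” behaviour concrete, here is a minimal Python sketch of a toy memristor. It assumes a deliberately simplified model, loosely in the spirit of linear ion-drift models: an internal state `x` between 0 and 1 moves in proportion to the charge flowing through the device, and the resistance interpolates between a low value `r_on` and a high value `r_off`. The class name `ToyMemristor` and all parameter values are hypothetical and not taken from any real device.

```python
class ToyMemristor:
    """Toy memristor whose resistance depends on the charge that has flowed through it.

    Hypothetical, simplified model: an internal state x in [0, 1] moves in
    proportion to the charge passed, and the resistance interpolates between
    r_on (x = 1) and r_off (x = 0). Parameter values are illustrative only.
    """

    def __init__(self, r_on=100.0, r_off=16_000.0, x=0.1, k=1e4):
        self.r_on = r_on    # lowest resistance, reached at x = 1 (ohms)
        self.r_off = r_off  # highest resistance, reached at x = 0 (ohms)
        self.x = x          # internal state
        self.k = k          # state change per coulomb of charge (1/C)

    @property
    def resistance(self):
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply_voltage(self, voltage, duration, steps=1000):
        """Apply a constant voltage for `duration` seconds and update the state."""
        dt = duration / steps
        for _ in range(steps):
            current = voltage / self.resistance  # Ohm's law
            # Charge flowing through the device shifts the state; clip to [0, 1].
            self.x = min(1.0, max(0.0, self.x + self.k * current * dt))


m = ToyMemristor()
print(round(m.resistance))   # initial resistance
m.apply_voltage(1.0, 0.1)    # positive pulse: resistance drops
print(round(m.resistance))
m.apply_voltage(0.0, 1.0)    # no voltage, no current: resistance is retained
print(round(m.resistance))
```

A positive voltage lowers the resistance, zero volts leaves it exactly where it was (no current, no change), and a negative voltage would raise it again.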

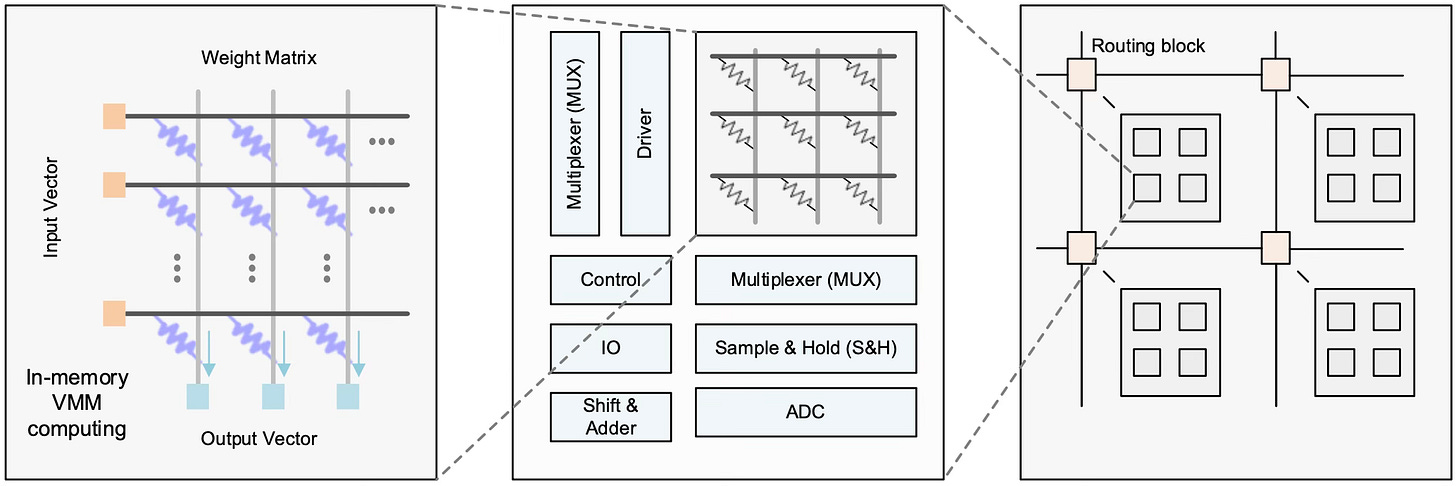

To use memristors for neuromorphic computing, they are arranged in a crossbar array. This array is a grid-like structure in which each memristor sits at the intersection (the cross-point) of two perpendicular wires, one row and one column. Compared to a typical ANN known from deep learning, a memristor and its adjustable resistance correspond to a connection between two nodes and its synaptic weight. Because the resistance is adjustable, the memristor can also be modified by a learning rule, just like the weights of an ANN. The in-memory computation then takes place as a simple vector-matrix multiplication (VMM), with the input vector (the input data) encoded, for example, as voltage amplitudes on the rows. By Ohm’s Law (voltage = current * resistance), each memristor passes a current proportional to its input voltage and its conductance (the inverse of its resistance), and by Kirchhoff’s current law these currents add up along each column. The column currents therefore form the output vector. Thus, the VMM (i.e. the computation) is performed in a single step without the inefficient data movements present in von-Neumann architectures, which can result in orders of magnitude higher computational throughput. The following figure illustrates this schematic, where the weight matrix represents the crossbar of memristors with their respective resistances (i.e. their synaptic weights).
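The column-wise summation can be sketched in a few lines of Python. This is an idealised model under stated assumptions: weights are stored as conductances (the inverse of resistance), and effects such as wire resistance, sneak currents and device noise are ignored. The function name `crossbar_vmm` is hypothetical. A physical crossbar produces all column currents at once; the loops here only reproduce the same arithmetic.

```python
def crossbar_vmm(voltages, conductances):
    """Column currents of an idealised memristor crossbar.

    voltages[i]: input voltage applied to row i (volts)
    conductances[i][j]: conductance of the memristor joining row i and column j (siemens)
    Returns the current collected at each column (amperes).
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law: the device at (i, j) passes I = V * G = V / R.
            # Kirchhoff's current law: currents meeting on column j add up.
            currents[j] += v * conductances[i][j]
    return currents


# Example with unit-free numbers for readability: 2 rows (inputs) x 2 columns (outputs)
print(crossbar_vmm([1.0, 2.0], [[1.0, 2.0], [3.0, 4.0]]))  # [7.0, 10.0]
```

Each output is the dot product of the input voltages with one column of conductances, which is exactly one entry of the vector-matrix product.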

In conclusion, it should come as no surprise that translating ANNs directly into hardware has the potential to vastly outperform conventional von-Neumann architectures that emulate ANNs in software.

👨🏾‍💻 Neuromorphic Algorithms

🌵 Spiking Neural Networks

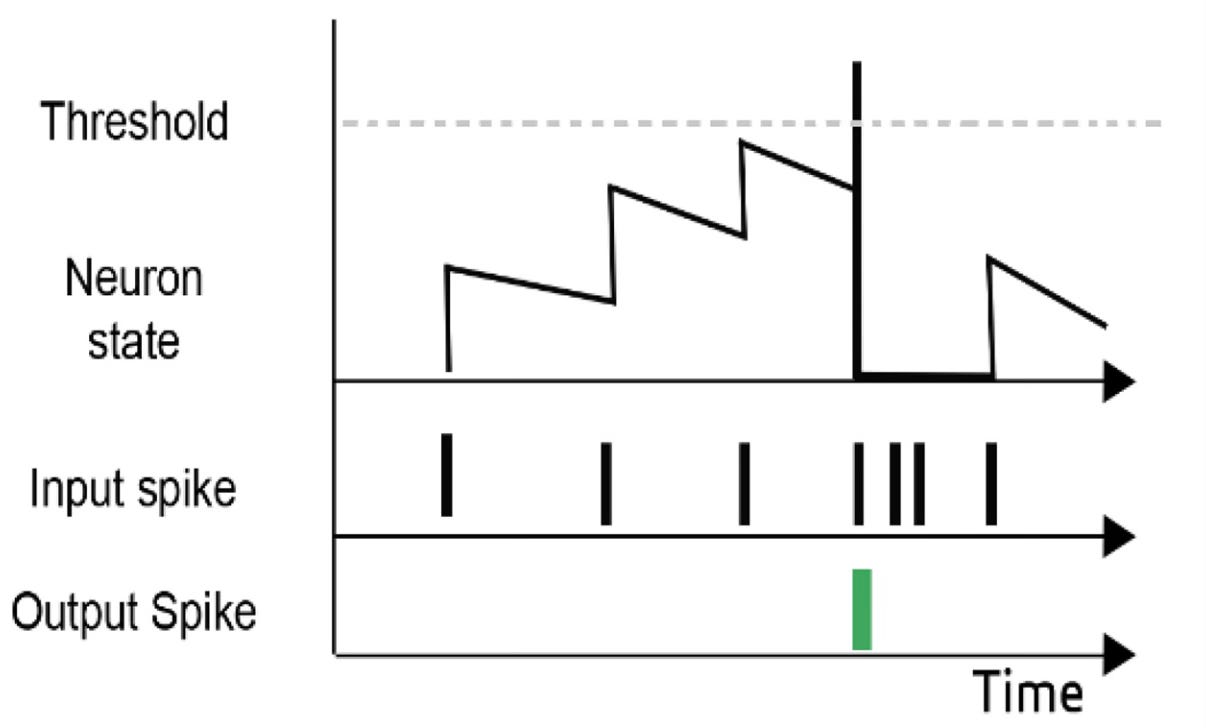

So far I have used ANNs as the underlying algorithm to explain the concept and promises of neuromorphic architectures. ANNs are probably the best-known approach to artificial intelligence (AI) and have performed well on a broad spectrum of tasks, for example in computer vision or control theory. But ANNs do not necessarily adhere to all neural processing mechanisms of the brain; rather, they are designed to be easy to train and implement on von-Neumann architectures. For example, the neurons of ANNs are represented by activation functions and synaptic weights that continuously (i.e. for every input) calculate an output. From a biological standpoint though, neurons only “fire”, and thus produce spikes, at certain events. This biological mechanism is not reflected in ANNs but in another form of neural network, the so-called spiking neural networks (SNNs), also referred to as the third generation of neural networks.

Different types of neuronal models have emerged for SNNs, which mainly differ in how closely they reflect biological characteristics and in their computational complexity. For the curious and mathematically gifted readers, take a look at this paper; it gives a great overview of the most popular approaches and their mathematical formulation. What all models have in common, though, is that they give SNNs their event-driven processing nature, which can make SNNs more energy-efficient and, potentially, computationally more powerful than ANNs, and particularly suitable for real-time processing and inference tasks. These neurons function by accumulating input signals over time. When the neuron’s potential reaches a certain threshold, it generates an output spike, which is then transmitted to other neurons as input. The timing of these spikes carries information, with different spike-timing patterns encoding different features of the input data.
The following figure illustrates the SNN neuron’s activation behaviour: it “fires” once the threshold is reached and thus activates only when an event occurs.
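One of the simplest and most widely used neuron models for SNNs is the leaky integrate-and-fire (LIF) neuron. The sketch below is a discrete-time LIF neuron in Python, chosen here as an illustrative example of the accumulate-and-fire behaviour described above; the function name `simulate_lif` and the parameters `threshold`, `leak` and `v_reset` are illustrative assumptions.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire (LIF) neuron.

    input_current: sequence of input values, one per time step
    threshold: membrane potential at which the neuron fires
    leak: fraction of the potential kept from one step to the next
    v_reset: potential right after a spike
    Returns a list of 0/1 spikes, one per time step.
    """
    v = v_reset
    spikes = []
    for i_t in input_current:
        v = leak * v + i_t   # leaky accumulation of input over time
        if v >= threshold:   # threshold reached: emit a spike ...
            spikes.append(1)
            v = v_reset      # ... and reset the membrane potential
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.5] * 6))   # [0, 0, 1, 0, 0, 1]
print(sum(simulate_lif([0.05] * 100)))  # 0: the leak wins, no spikes
```

With a constant input of 0.5 the potential builds up and the neuron fires every third step. With a constant input of 0.05 the potential settles at 0.5, below the threshold, so the neuron never fires: it only produces output when enough input arrives in a short time.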

Good reads:

Networks of spiking neurons: The third generation of neural network models

Advancements in Algorithms and Neuromorphic Hardware for Spiking Neural Networks

Opportunities for neuromorphic computing algorithms and applications

💡 Spike-Timing-Dependent Plasticity

Now the difficult part begins. Learning in an SNN is very challenging compared to an ANN. While the event-driven spiking makes computation very efficient, it introduces non-differentiability into the learning process. The algorithm used to teach ANNs what to do is called backpropagation. It is usually combined with gradient descent optimisation methods to adjust the synaptic weights of the connections in the network so that the error between the actual output and the desired output is minimised. If the functions are not differentiable (as with the spikes in an SNN), the gradients that backpropagation relies on cannot be calculated, so it is unclear how each weight should be adjusted to reduce the error. The solution to this dilemma can be found by looking more closely at, drum-roll please, biology, i.e. the brain.
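A small numerical experiment shows why spikes break gradient descent. Modelling the spike as a step function of the membrane potential (a simplification assumed here), a finite-difference estimate of its derivative is zero everywhere except right at the threshold, where it blows up. The helper names `spike` and `numerical_gradient` are hypothetical.

```python
def spike(v, threshold=1.0):
    """Step-function spike: 1 if the membrane potential v reaches the threshold, else 0."""
    return 1.0 if v >= threshold else 0.0


def numerical_gradient(f, x, eps=1e-6):
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


for v in (0.5, 0.99, 1.0, 1.5):
    print(f"v = {v}: d(spike)/dv ~ {numerical_gradient(spike, v):g}")
```

Below and above the threshold the gradient is exactly zero, so backpropagation receives no signal about how to change the weights; at the threshold the estimate grows without bound as `eps` shrinks.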

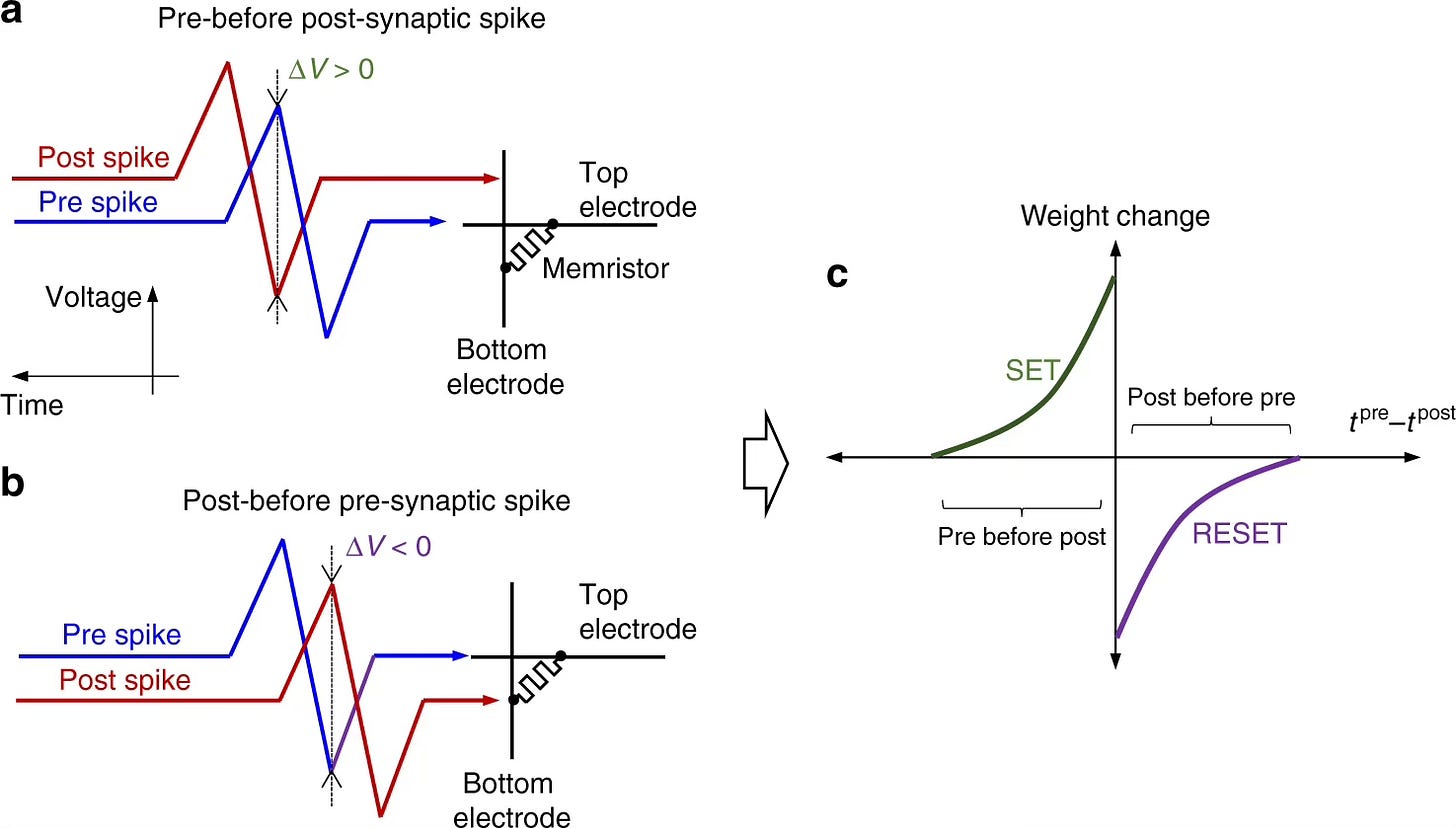

The brain is believed to learn, at least in part, via Hebbian learning. Hebbian learning is a concept from neuroscience that describes a form of synaptic plasticity in which the strength of a synapse is modified based on the correlated activity of the pre-synaptic and post-synaptic neurons, i.e. the two neurons connected via the synapse. Hebbian theory, proposed by Donald Hebb in 1949, is often summarised as: "Cells that fire together, wire together." In other words, if a pre-synaptic neuron repeatedly takes part in firing a post-synaptic neuron, the synapse between them is strengthened. This concept is used in spike-timing-dependent plasticity (STDP), a biological learning rule in which the strength of a synapse is modified based on the precise timing of pre-synaptic and post-synaptic spikes. STDP is a fundamental mechanism believed to underlie certain forms of adult learning and memory in biological neural networks. The following figure illustrates the STDP learning process and its effect on the synaptic weights (i.e. the resistance of the memristors). Presynaptic firing followed by a postsynaptic spike induces potentiation (i.e. a strengthening of the synapse), while postsynaptic firing that occurs before presynaptic firing leads to depression (i.e. a weakening of the synapse).
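The timing dependence described above is often modelled with a pair-based STDP rule whose weight change decays exponentially with the time difference between the two spikes. The Python sketch below assumes that common textbook form; the function name `stdp_weight_change`, the amplitudes `a_plus`, `a_minus` and the time constants `tau_plus`, `tau_minus` are illustrative values, not parameters from any specific memristive implementation. On memristive hardware, the returned value would correspond to a change in the device’s conductance.

```python
import math


def stdp_weight_change(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP: weight change for one pre/post spike pair.

    delta_t = t_post - t_pre in milliseconds (illustrative units).
    delta_t > 0: pre fires before post -> potentiation (positive change)
    delta_t < 0: post fires before pre -> depression (negative change)
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


for dt in (-40, -10, -2, 2, 10, 40):
    print(f"delta_t = {dt:+} ms -> weight change {stdp_weight_change(dt):+.4f}")
```

Positive time differences (pre before post) strengthen the synapse, negative ones weaken it, and both effects fade as the two spikes move further apart in time.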

This learning process is temporal and local, and differs in both respects from backpropagation-based learning in ANNs. STDP is sensitive to the temporal order of spikes and thus enables the learning of temporal patterns and sequences of events. STDP is also local, meaning that weight changes are determined solely by the activity of the pre-synaptic and post-synaptic neurons connected by a particular synapse. Unlike global learning rules such as backpropagation, where information from distant neurons influences synaptic changes, STDP only considers the activity of the two neurons a synapse connects. This locality makes learning very computationally efficient and, combined with its temporal nature, very promising for online learning applications, in which a neuromorphic circuit dynamically adapts and learns new patterns as data arrives.
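Locality is what makes online learning cheap: each synapse only needs information available at its two ends. A common way to implement STDP online is with exponentially decaying spike traces, sketched below in Python. The function `online_stdp`, this particular trace formulation and all parameter values are assumptions for illustration; in this variant, pairs of spikes that fall into the same time step do not contribute.

```python
import math


def online_stdp(pre_spikes, post_spikes, weights,
                a_plus=0.01, a_minus=0.012, tau=20.0, dt=1.0):
    """Online, trace-based STDP (hypothetical minimal variant).

    pre_spikes[t][i] / post_spikes[t][j]: 1 if the neuron fires at step t, else 0
    weights[i][j]: synapse from pre-neuron i to post-neuron j, updated in place
    """
    n_pre, n_post = len(weights), len(weights[0])
    pre_trace = [0.0] * n_pre    # decaying memory of recent pre-synaptic spikes
    post_trace = [0.0] * n_post  # decaying memory of recent post-synaptic spikes
    decay = math.exp(-dt / tau)
    for pre_t, post_t in zip(pre_spikes, post_spikes):
        pre_trace = [p * decay for p in pre_trace]
        post_trace = [q * decay for q in post_trace]
        for i in range(n_pre):
            for j in range(n_post):
                # Local rule: only the spikes and traces of neurons i and j are used.
                if post_t[j]:  # post fires after recent pre activity -> potentiate
                    weights[i][j] += a_plus * pre_trace[i]
                if pre_t[i]:   # pre fires after recent post activity -> depress
                    weights[i][j] -= a_minus * post_trace[j]
        # Add this step's spikes only after the update, so same-step pairs do not count.
        pre_trace = [p + s for p, s in zip(pre_trace, pre_t)]
        post_trace = [q + s for q, s in zip(post_trace, post_t)]
    return weights


# Two pre-neurons, one post-neuron; only pre-neuron 0 fires, two steps before the post-neuron.
pre = [[1, 0], [0, 0], [0, 0], [0, 0]]
post = [[0], [0], [1], [0]]
print(online_stdp(pre, post, [[0.5], [0.5]]))
```

The synapse from pre-neuron 0 is strengthened, while the synapse from the silent pre-neuron 1 keeps its weight, since no update ever looks beyond the two neurons a synapse connects. Swapping the order (post before pre) weakens the synapse instead.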

To sum up this technical part: if you are interested in how all of this can be translated into practical, real-world implementations, I recommend the paper by Prezioso et al. (2018). They train an SNN on a 20x20 memristor crossbar array with a variation of the STDP learning algorithm. Super insightful, but also very technical.

👀 Why are Neuromorphics relevant?

🔬 Academic Relevance

Neuromorphics are a promising approach to compute, with the potential to reduce power consumption by orders of magnitude. It therefore comes as no surprise that the field has gained, and keeps gaining, a lot of traction. My colleagues at Industrifonden put together a really well-written investment thesis on their take on the future of computing, in which they investigate the number of academic publications and the patent activity in the broader field of computing (seriously, check their thesis out!). Interestingly, neuromorphic computing leads on both in terms of compound annual growth rate (CAGR): 55% CAGR for publications and 60% CAGR for patents, nearly twice the growth rates of even quantum computing. If university spin-outs follow similar dynamics as in quantum computing, then exciting times are ahead for investing in computational Deep Tech!
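Growth rates like these compound quickly: the cumulative growth factor after `n` years at a constant CAGR is (1 + CAGR)^n. The Python snippet below applies the two rates quoted above; the five-year horizon and the function name `cumulative_growth` are arbitrary choices for illustration and not figures from the investment thesis.

```python
def cumulative_growth(cagr, years):
    """Factor by which a quantity grows after `years` at a constant compound annual growth rate."""
    return (1.0 + cagr) ** years


for label, rate in [("publications", 0.55), ("patents", 0.60)]:
    print(f"{label}: x{cumulative_growth(rate, 5):.1f} after 5 years")
# publications: x8.9 after 5 years
# patents: x10.5 after 5 years
```

Sustained over five years, a 55% CAGR means roughly nine times the starting volume, and a 60% CAGR roughly ten times.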

📱 Application Relevance

There are different driving forces that might push the adoption of neuromorphic architectures in the future. To sum up the potential benefits:

In-memory computing and thus “solving” the memory bottleneck

Extremely low power consumption

Real-time processing and inference

Parallel processing and pattern recognition capabilities

Adaptability and online learning

It is quite obvious that these characteristics make neuromorphic devices an interesting contender for a significant (maybe even dominant) market share in edge computing and AI, two huge growth markets. A vast range of market verticals would be suitable, like IoT devices in manufacturing, hardware for autonomous systems such as robotics or automotive, maybe even your next smartphone (or more likely the one after that), and many more. Mercedes-Benz, for example, used neuromorphic chips from BrainChip for speech recognition in its Vision EQXX concept car in 2022. More generally, computation, and AI computation in particular, is fully present in our everyday lives, and thus the potential for suitable hardware doing the actual computational work is nearly endless. The market is also moving in favor of neuromorphic devices because of the growing desire to move from the cloud to the edge, for example due to data privacy concerns or to improve latency. I won't go into detailed calculations here, but it's safe to say that the relevant markets are well into the triple-digit billions and continue to grow rapidly. What I consider unrealistic, though, is that neuromorphics will replace von-Neumann architectures entirely. I see the two complementing each other, each deployed where it can play to its strengths. Neuromorphics might thus win significant market share, but will never be the only relevant architecture deployed.

🧗🏻 Challenges for Adoption

As high as the promises of neuromorphics are, the challenges to be overcome are equally fierce. Neuromorphic circuits are not standard designs like mass-produced von-Neumann type chips. Adoption can therefore be expected to be quite slow, especially for the analog type discussed here, and it is more likely that we will see digital circuits first. It will take time until production and supply chain processes are established enough to deliver neuromorphics at scale. Technical challenges include, for example, the limited accuracy of memristive devices, which stems from the nature of analog circuits and their inherent stochastic variations. In my opinion, though, the biggest challenge lies on the software side. We will need tailored algorithms and software to make these architectures run efficiently, and adoption by developers can be expected to be complex. In the end, neuromorphics will not only compete against Nvidia’s chips, but also against its software platform CUDA, which allows developers and users alike to easily use the parallel computing capabilities of Nvidia’s chips. The role that CUDA plays in Nvidia's current market power in the AI accelerator sector is unfortunately often underestimated, if not forgotten completely.
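The accuracy problem can be illustrated with a small Monte Carlo sketch in Python: the ideal crossbar computation is repeated with each conductance perturbed by random multiplicative Gaussian noise, and the relative error of the output currents is measured. The Gaussian noise model, the function names and the example values are assumptions for illustration; real device variability is more complex than a single noise term.

```python
import random


def crossbar_vmm(voltages, conductances):
    """Ideal column currents: I_j = sum_i V_i * G_ij (Ohm's and Kirchhoff's laws)."""
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(len(conductances[0]))]


def relative_error(ideal, measured):
    """Euclidean norm of the deviation, relative to the norm of the ideal output."""
    diff = sum((a - b) ** 2 for a, b in zip(ideal, measured)) ** 0.5
    norm = sum(a * a for a in ideal) ** 0.5
    return diff / norm


def mean_error(voltages, conductances, sigma, trials=200, seed=0):
    """Average relative error when each conductance deviates by N(0, sigma) relative noise."""
    rng = random.Random(seed)
    ideal = crossbar_vmm(voltages, conductances)
    total = 0.0
    for _ in range(trials):
        # Assumed variation model: G -> G * (1 + noise), noise ~ N(0, sigma)
        noisy = [[g * (1.0 + rng.gauss(0.0, sigma)) for g in row] for row in conductances]
        total += relative_error(ideal, crossbar_vmm(voltages, noisy))
    return total / trials


voltages = [0.2, 0.1, 0.3]                                  # volts
conductances = [[1e-3, 2e-3], [3e-3, 1e-3], [2e-3, 2e-3]]   # siemens
for sigma in (0.0, 0.05, 0.2):
    print(f"sigma={sigma}: mean relative error {mean_error(voltages, conductances, sigma):.3f}")
```

Without variation the analog result is exact, and the output error grows with the spread of the device conductances, which is why analog neuromorphic hardware needs either very uniform devices or algorithms that tolerate this noise.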

🏁 Concluding Remarks

For those of you who are now as hooked on neuromorphics as I am, check out these start-ups that are changing the way we compute:

Ferroelectric Memory Company - ferroelectric memristors

Synthara - memory-agnostic in-memory computation

Axelera AI - digital in-memory computation

Innatera - analog/digital neuromorphic processor

Fractile - analog in-memory computation

And that brings us to the end of the 2nd episode of Deep Tech Demystified. I hope you enjoyed this type of in-depth content! If you don’t want to miss the next content drop, make sure to subscribe.

If you are a founder or a scientist building in the field of neuromorphic hardware and/or software, please reach out to me via email (felix@playfair.vc) or LinkedIn; I would love to have a chat! Cheers and until next time!